This is a continuation of the second part; this time we want to test VRF-lite.

Again, I am following the author’s post but adapting it to my environment: libvirt instead of VirtualBox, and Debian 10 for the VMs. All my data is here.

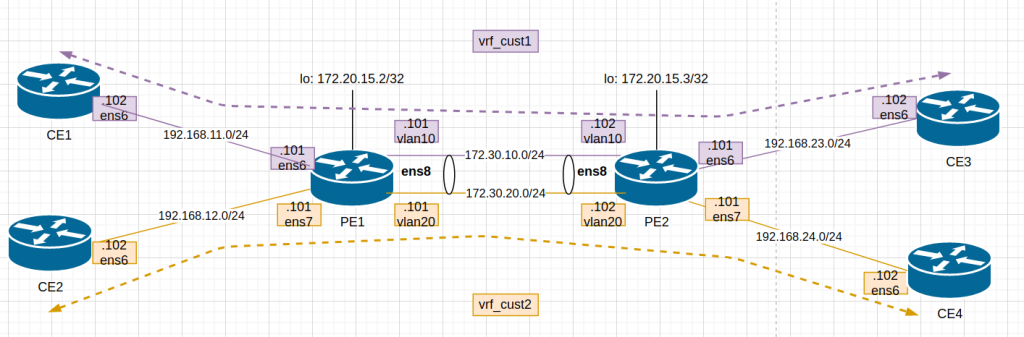

This is the diagram adapted to my lab:

After updating the Vagrantfile and the provisioning script, I ran “vagrant up”. The six VMs don’t take long to boot, which is a good thing.

The provisioning script mainly configures PE1 and PE2. Here it is in a bit more detail:

```sh
# $self, $neighbor, $route1 and $route2 hold per-PE values set elsewhere
# in the provisioning (not shown here)

# enable IPv4 forwarding (routing)
sudo sysctl net.ipv4.ip_forward=1

# add a loopback address (not used in lab3)
sudo ip addr add 172.20.5.$self/32 dev lo

# remove the IP on the PE1-PE2 link, as we will set up a trunk with two VLANs
sudo ip addr del 192.168.66.10$self/24 dev ens8

# create two VLANs: 10 (CE1, CE3) and 20 (CE2, CE4)
sudo ip link add link ens8 name vlan10 type vlan id 10
sudo ip link add link ens8 name vlan20 type vlan id 20

# assign an IP to each VLAN interface
sudo ip addr add 172.30.10.10$self/24 dev vlan10
sudo ip addr add 172.30.20.10$self/24 dev vlan20

# bring each VLAN interface up, as new interfaces are down by default
sudo ip link set vlan10 up
sudo ip link set vlan20 up

# create two routing tables, each with a null (blackhole) default route
sudo ip route add blackhole 0.0.0.0/0 table 10
sudo ip route add blackhole 0.0.0.0/0 table 20

# create two VRFs and bind each one to a table created above
sudo ip link add name vrf_cust1 type vrf table 10
sudo ip link add name vrf_cust2 type vrf table 20

# assign interfaces to the VRFs (example for PE1)
sudo ip link set ens6 master vrf_cust1     # interface to CE1
sudo ip link set vlan10 master vrf_cust1   # interface to PE2 (vlan10)
sudo ip link set ens7 master vrf_cust2     # interface to CE2
sudo ip link set vlan20 master vrf_cust2   # interface to PE2 (vlan20)

# bring the VRFs up
sudo ip link set vrf_cust1 up
sudo ip link set vrf_cust2 up

# add a static route in each VRF table to reach the opposite CE
sudo ip route add 192.168.$route1.0/24 via 172.30.10.10$neighbor table 10
sudo ip route add 192.168.$route2.0/24 via 172.30.20.10$neighbor table 20
```
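Each customer VRF in the script follows the same recipe: a table with a null default route, a VRF device bound to that table, the CE-facing and trunk interfaces enslaved to it, and a static route towards the remote CE. As a minimal sketch of that pattern, the helper below (its name and argument order are my own, hypothetical, not part of the original provisioning) only prints the commands for one customer, so they can be reviewed before being piped to a root shell:

```sh
#!/bin/sh
# Hypothetical helper: print (not run) the iproute2 commands that build one
# customer VRF, following the same steps as the provisioning script above.
# Usage: vrf_lite_cmds VRF TABLE REMOTE_NET NEXT_HOP IFACE...
vrf_lite_cmds() {
  local vrf=$1 table=$2 remote_net=$3 next_hop=$4
  local ifc
  shift 4   # whatever is left are the interfaces to enslave to the VRF

  # null default route in the VRF's table, as in the original script
  echo "ip route add blackhole 0.0.0.0/0 table $table"
  # VRF device bound to that table
  echo "ip link add name $vrf type vrf table $table"
  # CE-facing and trunk (VLAN) interfaces become members of the VRF
  for ifc in "$@"; do
    echo "ip link set $ifc master $vrf"
  done
  echo "ip link set $vrf up"
  # static route to the remote CE subnet, installed in the VRF's table
  echo "ip route add $remote_net via $next_hop table $table"
}
```

For example, on PE1, assuming PE2’s vlan10 address is 172.30.10.102 (i.e. $neighbor=2) and CE3 sits on 192.168.23.0/24: “vrf_lite_cmds vrf_cust1 10 192.168.23.0/24 172.30.10.102 ens6 vlan10 | sudo sh”.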

Check the status of the VRFs in PE1:

```
vagrant@PE1:/vagrant$ ip link show type vrf
8: vrf_cust1: mtu 65536 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether c6:b8:f2:3b:53:ed brd ff:ff:ff:ff:ff:ff
9: vrf_cust2: mtu 65536 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether 62:1c:1d:0a:68:3d brd ff:ff:ff:ff:ff:ff
vagrant@PE1:/vagrant$
vagrant@PE1:/vagrant$ ip link show vrf vrf_cust1
3: ens6: mtu 1500 qdisc pfifo_fast master vrf_cust1 state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:6f:16:1e brd ff:ff:ff:ff:ff:ff
6: vlan10@ens8: mtu 1500 qdisc noqueue master vrf_cust1 state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:33:ab:0b brd ff:ff:ff:ff:ff:ff
vagrant@PE1:/vagrant$
```
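“ip link show vrf” is fine for a quick look. For a scripted check, for example at the end of provisioning, a minimal sketch that extracts the member interfaces of a VRF from “ip -o link show” output (one line per interface) could look like this; the function name is hypothetical:

```sh
#!/bin/sh
# Hypothetical helper: read "ip -o link show" style lines on stdin and print
# the names of the interfaces whose master is the given VRF.
vrf_members() {
  awk -v vrf="$1" '{
    name = $2
    sub(/:$/, "", name)    # "ens6:"        -> "ens6"
    sub(/@.*/, "", name)   # "vlan10@ens8"  -> "vlan10"
    for (i = 3; i < NF; i++)
      if ($i == "master" && $(i + 1) == vrf) print name
  }'
}
```

On PE1, “ip -o link show | vrf_members vrf_cust1” should print ens6 and vlan10. The routes of the VRF itself can be listed with “ip route show vrf vrf_cust1”.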

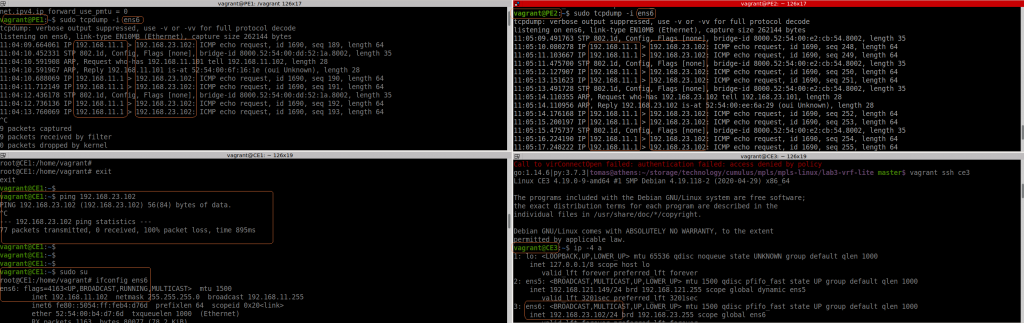

So let’s test if we can ping from CE1 to CE3:

OK, it fails. I noticed that PE1 sees the packet from CE1, but the source IP is not the expected one (11.1 is the host, i.e. my laptop). The packet reaches PE2 with the same wrong source IP, and then CE3. CE3 sends the ICMP reply to 11.1, so it never reaches CE1.

On the positive side, VRF-lite itself seems to work.

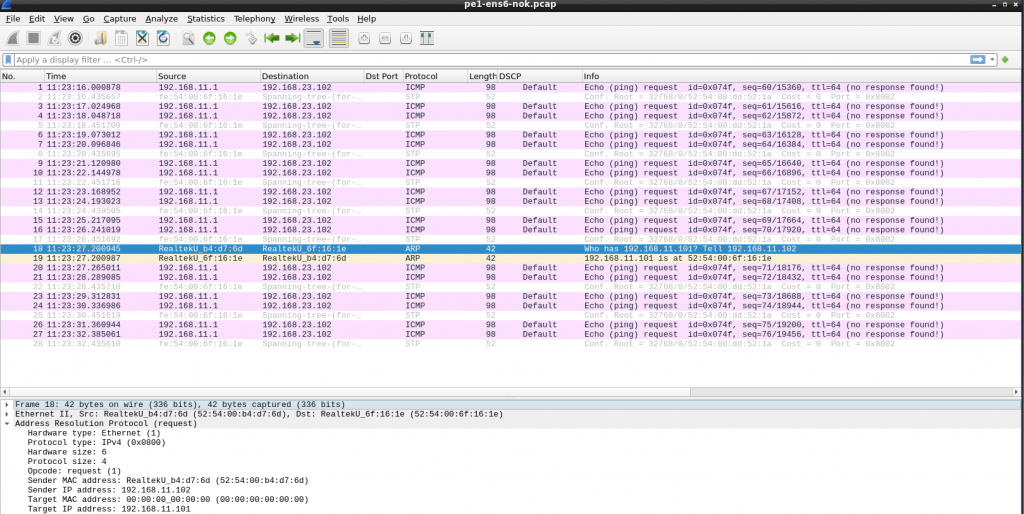

I double-checked all the IPs, routing, etc. Could it be a duplicated MAC between CE1 and my laptop? I installed “net-tools” to get the “arp” command and checked the ARP table in CE1. I also checked the ARP requests in Wireshark, and everything looked fine.
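Installing net-tools is not strictly necessary: iproute2’s “ip neigh show” prints the same neighbour (ARP) table. A minimal sketch, with a hypothetical function name, that reads that output and flags any MAC address seen for more than one IP, which is one quick way to test a duplicated-MAC theory from inside a VM:

```sh
#!/bin/sh
# Hypothetical helper: read "ip neigh show" lines on stdin and print every
# MAC address that appears for more than one IP, followed by those IPs.
dup_macs() {
  awk '{
    for (i = 2; i < NF; i++) {
      if ($i == "lladdr") {
        mac = tolower($(i + 1))
        count[mac]++
        if (count[mac] == 1) ips[mac] = $1
        else ips[mac] = ips[mac] "," $1
      }
    }
  }
  END {
    for (m in count)
      if (count[m] > 1) print m, ips[m]
  }'
}
```

Usage: “ip neigh show | dup_macs” prints nothing when every neighbour has its own MAC. It only compares neighbour entries; to rule out a clash with CE1’s own address, compare against the MAC shown by “ip link show” on CE1.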

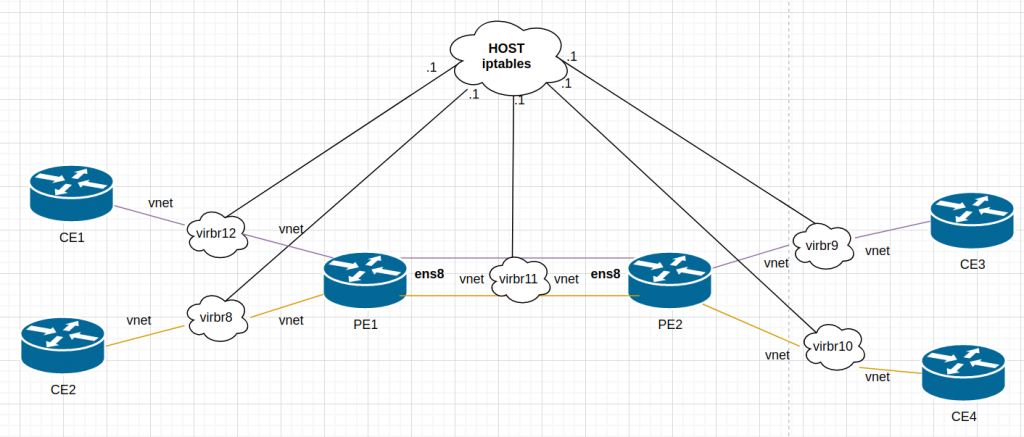

Somehow, the host was getting involved. Keep in mind that this is a simulated network, so the host has access to all the “links” in the lab: libvirt creates a bridge (switch) for each link and adds a vnet interface (port) for each VM attached to it:

```
# brctl show
bridge name     bridge id               STP enabled     interfaces
virbr10         8000.525400b747b0       yes             vnet27
                                                        vnet30
virbr11         8000.5254006e5a56       yes             vnet23
                                                        vnet31
virbr12         8000.525400dd521a       yes             vnet19
                                                        vnet21
virbr3          8000.525400a38db1       yes             vnet16
                                                        vnet18
                                                        vnet20
                                                        vnet24
                                                        vnet26
                                                        vnet28
virbr8          8000.525400de61f2       yes             vnet17
                                                        vnet22
virbr9          8000.525400e2cb54       yes             vnet25
                                                        vnet29
```
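The continuation lines in “brctl show” make it awkward to grep for a given vnet. A small sketch (hypothetical function name) that flattens the output into one “bridge port” pair per line:

```sh
#!/bin/sh
# Hypothetical helper: turn "brctl show" output into "bridge port" pairs,
# one per line, so a given vnet can be found with grep.
bridge_ports() {
  awk 'NR == 1 { next }                        # skip the header line
       NF == 3 { br = $1; next }               # bridge without ports
       NF >= 4 { br = $1; print br, $4; next } # bridge line with its first port
       NF == 1 { print br, $1 }'               # continuation line: another port
}
```

“brctl show | bridge_ports | grep -w vnet27” then tells which bridge that port belongs to. On the libvirt side, “virsh domiflist DOMAIN” lists a VM’s vnet interfaces together with their source networks.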

“.1” is always the host, but it was clear that my routing was correct on all devices. Then I remembered some issues I had during the summer while playing with containers/Docker and doing some routing, so I checked iptables.

I didn’t have iptables configured in the VMs, but as stated earlier, the host is connected to all the “links” between the VMs; there is no real point-to-point link.
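One detail worth checking here: traffic between two VMs on the same libvirt bridge is switched by the host, not routed, so normally it never meets the host’s iptables rules. Bridged IPv4 traffic is only passed through iptables when the br_netfilter module is loaded and net.bridge.bridge-nf-call-iptables is 1, and Docker usually enables that, which would fit the Docker history above. This is an assumption about the root cause, not something verified in this lab. A minimal sketch to check it (function name hypothetical):

```sh
#!/bin/sh
# Hypothetical helper: report whether bridged IPv4 traffic is handed to the
# host's iptables. The optional argument overrides the sysctl path (for testing).
bridge_nf_status() {
  local f=${1:-/proc/sys/net/bridge/bridge-nf-call-iptables}
  if [ ! -r "$f" ]; then
    # the sysctl only exists once br_netfilter is loaded
    echo "br_netfilter not loaded"
  elif [ "$(cat "$f")" = 1 ]; then
    echo "enabled"
  else
    echo "disabled"
  fi
}
```

Running “bridge_nf_status” on the host prints enabled, disabled or br_netfilter not loaded.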

```
# iptables -t nat -vnL --line-numbers
...
Chain LIBVIRT_PRT (1 references)
num   pkts bytes target     prot opt in     out     source               destination
1       11   580 RETURN     all  --  *      *       192.168.11.0/24      224.0.0.0/24
2        0     0 RETURN     all  --  *      *       192.168.11.0/24      255.255.255.255
3        0     0 MASQUERADE tcp  --  *      *       192.168.11.0/24     !192.168.11.0/24      masq ports: 1024-65535
4       40  7876 MASQUERADE udp  --  *      *       192.168.11.0/24     !192.168.11.0/24      masq ports: 1024-65535
5       16  1344 MASQUERADE all  --  *      *       192.168.11.0/24     !192.168.11.0/24
6       15   796 RETURN     all  --  *      *       192.168.24.0/24      224.0.0.0/24
7        0     0 RETURN     all  --  *      *       192.168.24.0/24      255.255.255.255
8        0     0 MASQUERADE tcp  --  *      *       192.168.24.0/24     !192.168.24.0/24      masq ports: 1024-65535
9       49  9552 MASQUERADE udp  --  *      *       192.168.24.0/24     !192.168.24.0/24      masq ports: 1024-65535
10       0     0 MASQUERADE all  --  *      *       192.168.24.0/24     !192.168.24.0/24
```

```
# iptables-save -t nat
# Generated by iptables-save v1.8.7 on Sun Feb 7 12:06:09 2021
*nat
:PREROUTING ACCEPT [365:28580]
:INPUT ACCEPT [143:14556]
:OUTPUT ACCEPT [1617:160046]
:POSTROUTING ACCEPT [1390:101803]
:DOCKER - [0:0]
:LIBVIRT_PRT - [0:0]
-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER
-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER
-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE
-A POSTROUTING -s 172.18.0.0/16 ! -o br-4bd17cfa19a8 -j MASQUERADE
-A POSTROUTING -s 172.19.0.0/16 ! -o br-43481af25965 -j MASQUERADE
-A POSTROUTING -j LIBVIRT_PRT
-A POSTROUTING -s 192.168.122.0/24 -d 224.0.0.0/24 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 -d 255.255.255.255/32 -j RETURN
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A POSTROUTING -s 192.168.122.0/24 ! -d 192.168.122.0/24 -j MASQUERADE
-A DOCKER -i docker0 -j RETURN
-A DOCKER -i br-4bd17cfa19a8 -j RETURN
-A DOCKER -i br-43481af25965 -j RETURN
-A LIBVIRT_PRT -s 192.168.11.0/24 -d 224.0.0.0/24 -j RETURN
-A LIBVIRT_PRT -s 192.168.11.0/24 -d 255.255.255.255/32 -j RETURN
-A LIBVIRT_PRT -s 192.168.11.0/24 ! -d 192.168.11.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A LIBVIRT_PRT -s 192.168.11.0/24 ! -d 192.168.11.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A LIBVIRT_PRT -s 192.168.11.0/24 ! -d 192.168.11.0/24 -j MASQUERADE
-A LIBVIRT_PRT -s 192.168.24.0/24 -d 224.0.0.0/24 -j RETURN
-A LIBVIRT_PRT -s 192.168.24.0/24 -d 255.255.255.255/32 -j RETURN
-A LIBVIRT_PRT -s 192.168.24.0/24 ! -d 192.168.24.0/24 -p tcp -j MASQUERADE --to-ports 1024-65535
-A LIBVIRT_PRT -s 192.168.24.0/24 ! -d 192.168.24.0/24 -p udp -j MASQUERADE --to-ports 1024-65535
-A LIBVIRT_PRT -s 192.168.24.0/24 ! -d 192.168.24.0/24 -j MASQUERADE
```
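With several Docker and libvirt networks the NAT table gets long. A minimal sketch (hypothetical function name) that reads “iptables-save -t nat” output and lists each chain and source subnet that has a MASQUERADE rule, which makes it easier to spot lab subnets that should not be there:

```sh
#!/bin/sh
# Hypothetical helper: read "iptables-save -t nat" output on stdin and list
# "chain source-subnet" for every rule that jumps to MASQUERADE.
masq_sources() {
  awk '$1 == "-A" && / -j MASQUERADE/ {
         for (i = 3; i < NF; i++)
           if ($i == "-s") print $2, $(i + 1)
       }' | LC_ALL=C sort -u
}
```

On the host above, “sudo iptables-save -t nat | masq_sources” would list, among others, LIBVIRT_PRT 192.168.11.0/24 and LIBVIRT_PRT 192.168.24.0/24.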

OK, so traffic from 192.168.11.0/24 to 192.168.23.0/24 is NAT-ed (MASQUERADE in iptables), which explains why PE1 sees it coming from 11.1. Let’s remove that rule:

```
# iptables -t nat -D LIBVIRT_PRT -s 192.168.11.0/24 ! -d 192.168.11.0/24 -j MASQUERADE
```
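This deletes only the protocol-agnostic rule (number 5 in the listing above). That is enough for ping, since ICMP only matches that rule, but TCP and UDP traffic from 192.168.11.0/24 would still be masqueraded by rules 3 and 4. A minimal sketch (hypothetical helper) that prints the delete commands for all three MASQUERADE rules of one subnet, using the rule specs shown by iptables-save:

```sh
#!/bin/sh
# Hypothetical helper: print "iptables -t nat -D" commands for the three
# MASQUERADE rules (TCP, UDP and all protocols) that LIBVIRT_PRT holds
# for one NAT-ed subnet. Nothing is executed here.
masq_delete_cmds() {
  local net=$1
  local base="iptables -t nat -D LIBVIRT_PRT -s $net ! -d $net"
  echo "$base -p tcp -j MASQUERADE --to-ports 1024-65535"
  echo "$base -p udp -j MASQUERADE --to-ports 1024-65535"
  echo "$base -j MASQUERADE"
}
```

For example “masq_delete_cmds 192.168.11.0/24 | sudo sh”. If a rule is already gone, iptables prints an error and sh carries on with the next line. libvirt will likely add the rules back when it restarts the network, so this is a per-session workaround.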

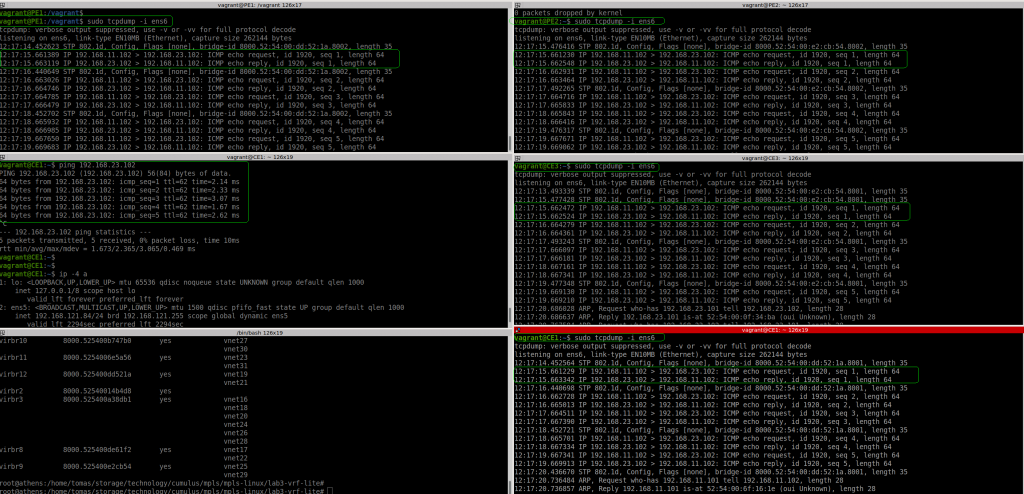

Test again pinging from CE1 to CE3:

Now it works properly: we can see the correct IPs at every hop (PE1, PE2 and CE3).

So this seems to be built-in libvirt behaviour. I still need to find out how to “fix” it every time I run “vagrant up”.
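One direction to explore: libvirt adds these MASQUERADE rules for networks whose forward mode is nat, while networks in route mode, or isolated networks without a “forward” element, do not get them. So ideally the lab networks would not be created in NAT mode; if I read the vagrant-libvirt documentation correctly, private networks accept a libvirt__forward_mode option for that, but this is untested here. As a first step, a minimal sketch (hypothetical function name) that reports a network’s forward mode from “virsh net-dumpxml” output, treating a “forward” element without a mode attribute as nat, which I believe is libvirt’s default:

```sh
#!/bin/sh
# Hypothetical helper: read a libvirt network XML definition on stdin and
# print its forward mode ("nat", "route", ...) or "isolated" when the
# network has no forward element at all.
#
# Example (on the host):
#   for n in $(virsh net-list --all --name); do
#     printf '%s: ' "$n"; virsh net-dumpxml "$n" | net_forward_mode
#   done
net_forward_mode() {
  local xml mode
  xml=$(cat)
  case $xml in
    *"<forward "*|*"<forward>"*|*"<forward/>"*) ;;
    *) echo isolated; return 0 ;;
  esac
  # pull the value of the mode attribute, if there is one
  mode=$(printf '%s\n' "$xml" |
    sed -n "s/.*<forward [^>]*mode=['\"]\([a-z]*\)['\"].*/\1/p" | head -n 1)
  # no mode attribute: assumed to mean nat
  echo "${mode:-nat}"
}
```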