AI Labs Powers: Interesting article on solutions for getting power for AI infra

Colossus2: The tricks to get power between states… and cost.

Amazon Leo Architecture + Internet Edge ARC320: I didn’t know AWS was going to compete with Starlink… but given JB’s Blue Origin, I guess it makes sense.

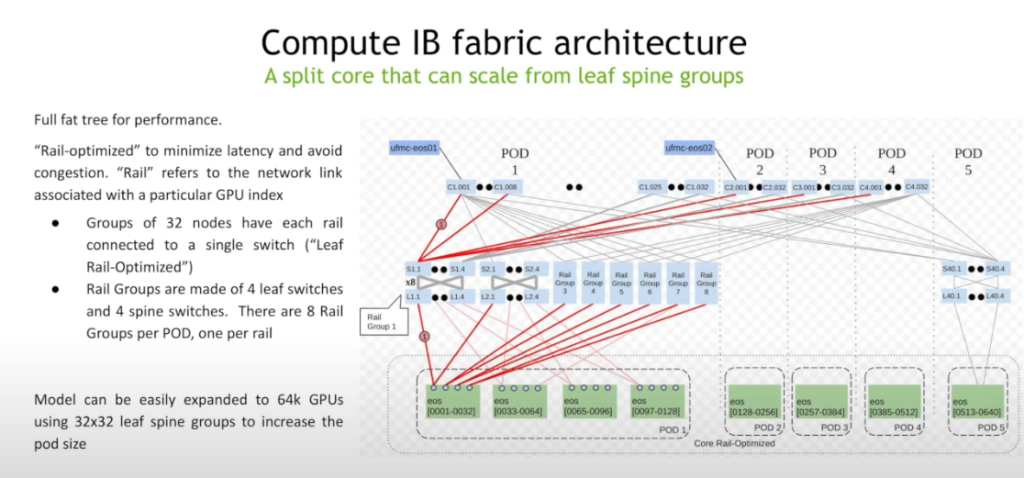

AWS Network Infra 2025 NET402: The most interesting part for me starts at minute 29. Precast fiber duct banks, TE pre-signalled bypass IP tunnels for each path (without RSVP-TE?) and constant recalculation. UltraCluster3, connector improvements (36% fewer link failures! 76% less cabling time!). UltraSwitch with dynamic LB and adaptive routing (like IB and UltraEthernet).

AWS DynamoDB outage lessons learnt

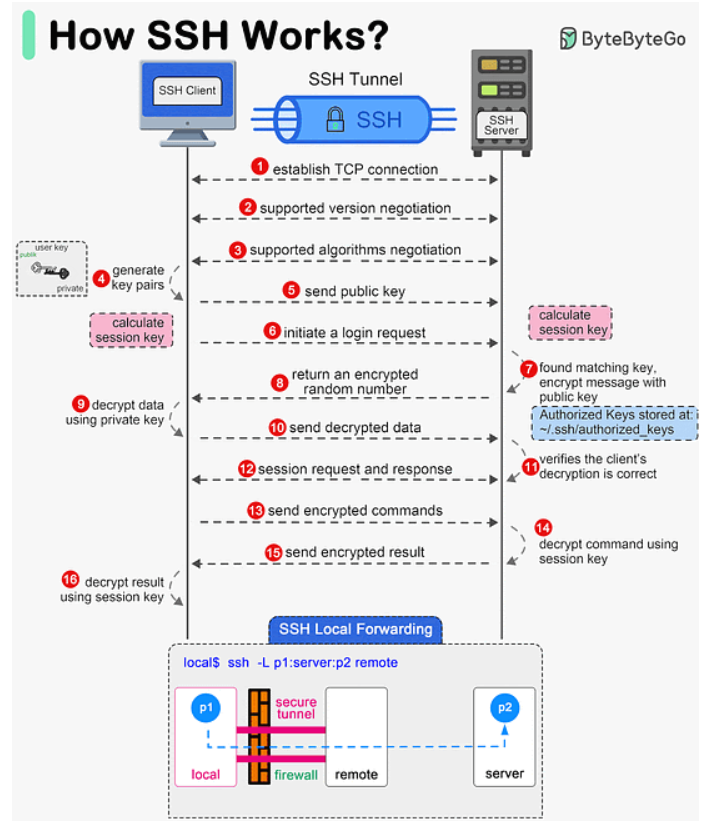

Kubernetes pod networking: Good refresher

Veritasium: Why ASML is so critical.

Veritasium: Power Laws: I think I get it… but it is scary: forest fires, sandpiles, money, investment…

Extreme success, you can’t be a balanced person: I like this format of smaller conversations. It is difficult to find time for 1h+ videos

Google DeepMind: I like the history behind DeepMind and its founder. I didn’t know they tried to beat the Go champion in China… and disconnected.

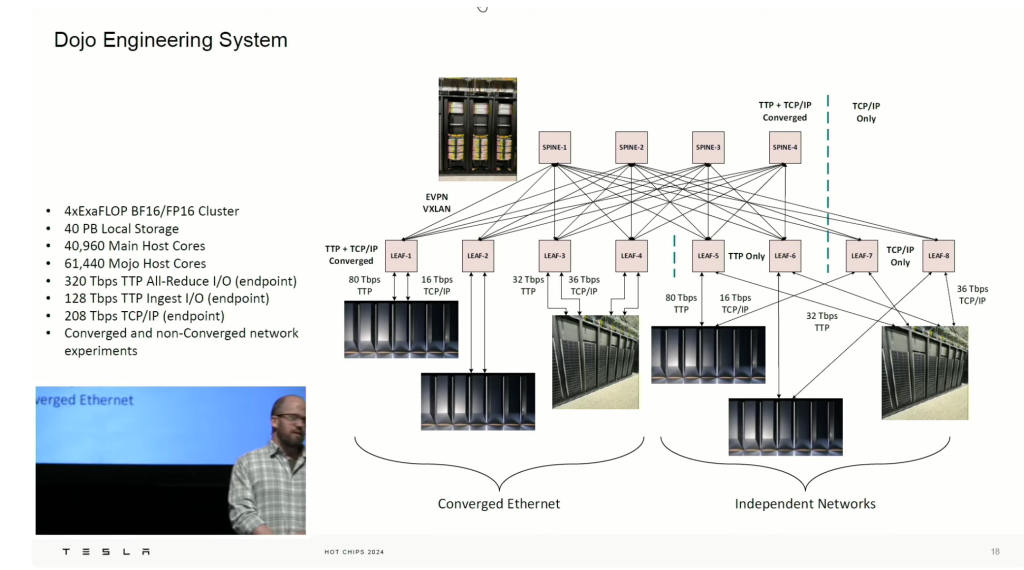

HotChips2025:

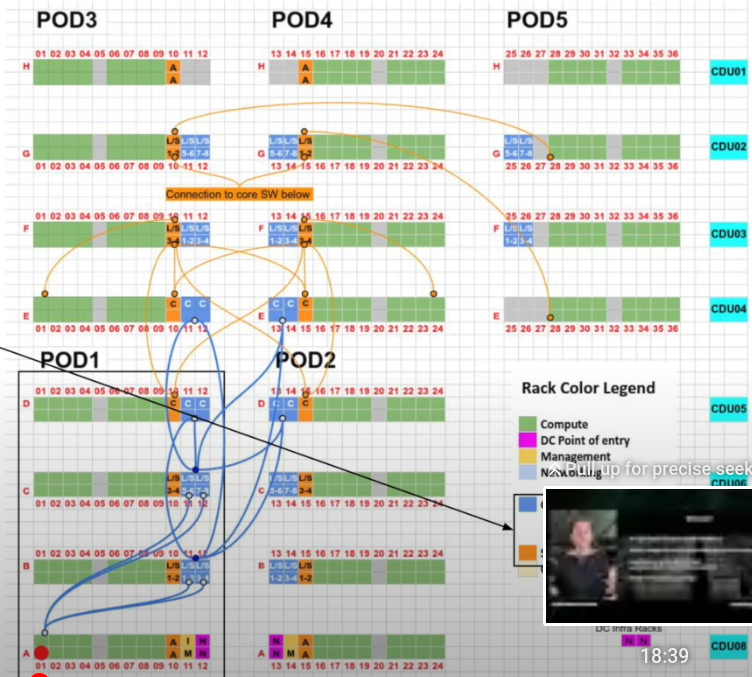

- Datacenter Racks part2:

- Nice NVIDIA presentation by a mechanical engineer.

- Meta Catalina pod 33:39 – 4x racks for liquid cooling for 2x IT racks! 42:39 3 networks: frontend (N-S), backend (E-W) and management/console. Leak monitoring.

- Google TPU rack Ironwood: I need to research the 3D Torus connection. 1h15m16

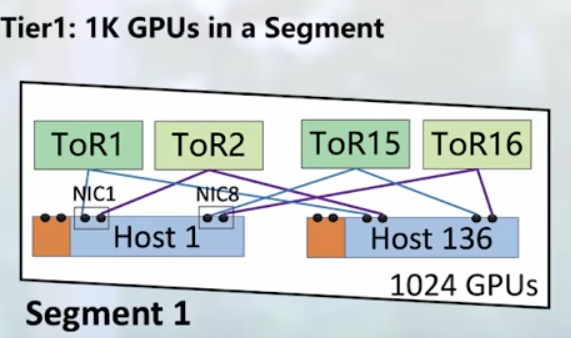

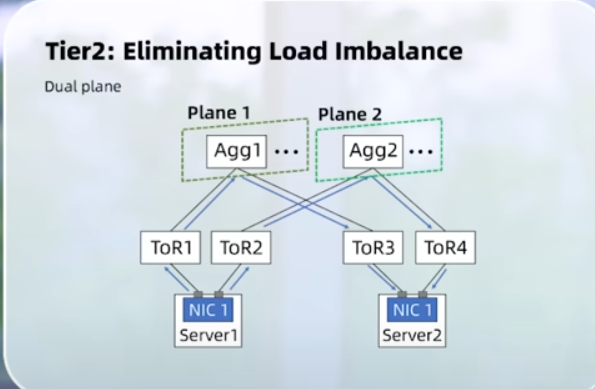

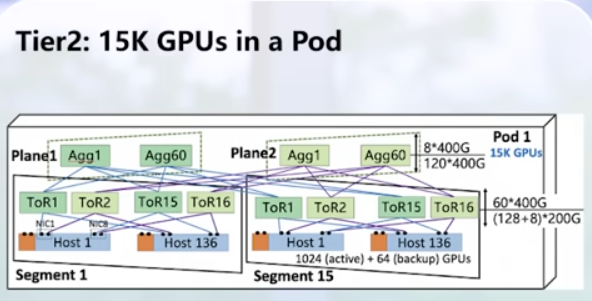
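As a starting point for the 3D torus research mentioned above, here is a minimal sketch of how neighbors and hop counts work in a generic 3D torus. The 4x4x4 dimensions and the function names `torus_neighbors` and `torus_hops` are my own assumptions for illustration, not details taken from the Ironwood talk.

```python
def torus_neighbors(coord, dims):
    """Return the six neighbors of a node in a 3D torus.

    Each axis wraps around, so the node at the "edge" connects
    back to the node at the opposite edge.
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % dims[axis]  # wraparound link
            neighbors.append(tuple(n))
    return neighbors


def torus_hops(a, b, dims):
    """Minimum hop count between two nodes, taking the shorter way around each axis."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))


# Hypothetical 4x4x4 torus (64 nodes), chosen only as an example size.
dims = (4, 4, 4)
print(torus_neighbors((0, 0, 0), dims))
print(torus_hops((0, 0, 0), (3, 3, 3), dims))
```

The point of the wraparound links is visible in the second call: opposite corners of the cube are 3 hops apart instead of 9, because each axis can be crossed in one hop the "short way".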

- Networking:

- Intel IPU E220: This is a NIC, although I wasn’t 100% sure until I checked on another site. You can use P4

- AMD Pensando Pollara 400 (NIC). P4 architecture. UltraEthernet ready. 48:36 95% network utilization (intelligent LB, congestion mgmt (RTT-based), fast failover and loss recovery (selective ACK) = the trinity)

- NVIDIA ConnectX-8 SuperNIC: 1h13m multiplane – as I understand it, you use more leaf switches (in the example, 4 planes = 4 leaves) and you get 64x GPU scale

switch radix 512, gpu 8x100G, two-level non-blocking

standard nic 2-level non-blocking: 64×32=2k

ConnectX-8 multiplane: 256×512=128k

Spectrum-X: network round-trip time: 5-10us, packet transmission 2ns

demo 1h24m (with grafana!)
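The multiplane arithmetic above can be checked with a small sketch. It assumes the usual two-level non-blocking (leaf-spine) rule: each leaf uses half its ports for endpoints and half for uplinks, and there can be as many leaves as a spine has ports. The function name and the reading of radix 64 vs radix 512 are my interpretation of the numbers in the notes, not something stated in the talk.

```python
def two_level_nonblocking_endpoints(radix):
    """Max endpoints in a two-level non-blocking fabric built from switches of a given radix."""
    down_ports_per_leaf = radix // 2  # the other half goes up to the spines
    max_leaves = radix                # each spine port connects to one leaf
    return max_leaves * down_ports_per_leaf


standard = two_level_nonblocking_endpoints(64)     # 64 x 32
multiplane = two_level_nonblocking_endpoints(512)  # 512 x 256

print(standard)                 # 2048
print(multiplane)               # 131072
print(multiplane // standard)   # 64
```

Under these assumptions the 2k and 128k figures both come out, and their ratio is the 64x GPU scale mentioned in the talk: splitting each port into 8x100G lanes raises the effective radix 8x, and capacity grows with the square of the radix.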

NVIDIA buys Groq: interesting details between SRAM vs HBM. And it seems the key is “assembly line architecture”

Polyglot: no magic tricks. I need more exposure.