So NVIDIA has an AI supercomputer via this. Meta, Google and MS making comments about it. And based on this, it is a 24 racks setup using 900GBps NVLink-C2C interface, so no ethernet and no infiniband. Here, there is a bit more info about NVLink:

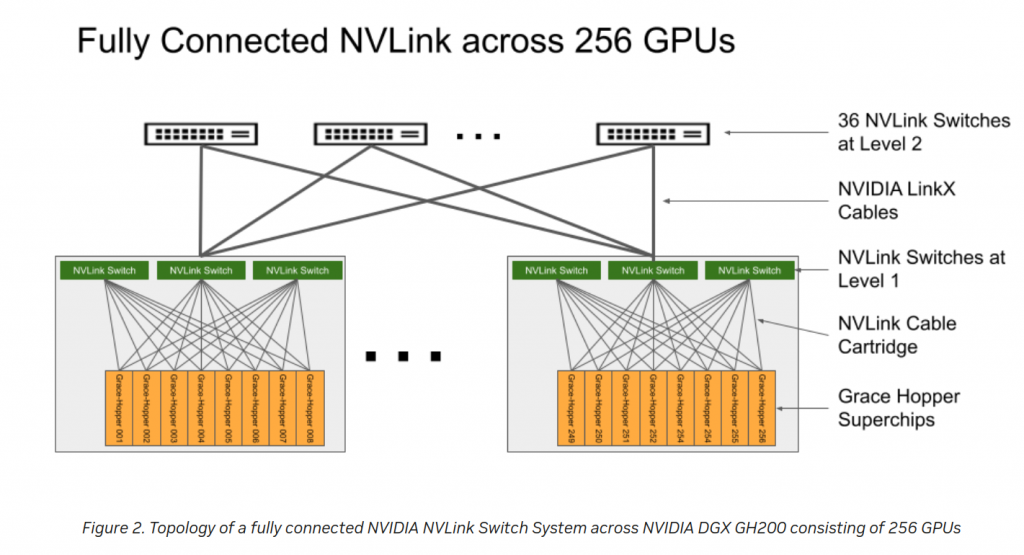

NVLink Switch System forms a two-level, non-blocking, fat-tree NVLink fabric to fully connect 256 Grace Hopper Superchips in a DGX GH200 system. Every GPU in DGX GH200 can access the memory of other GPUs and extended GPU memory of all NVIDIA Grace CPUs at 900 GBps.

This is the official page for NVlink but only with the above I understood this is like a “new” switching infrastructure.

But looks like if you want to connect up those supercomputers, you need to use infiniband. And again power/cooling is a important subject.