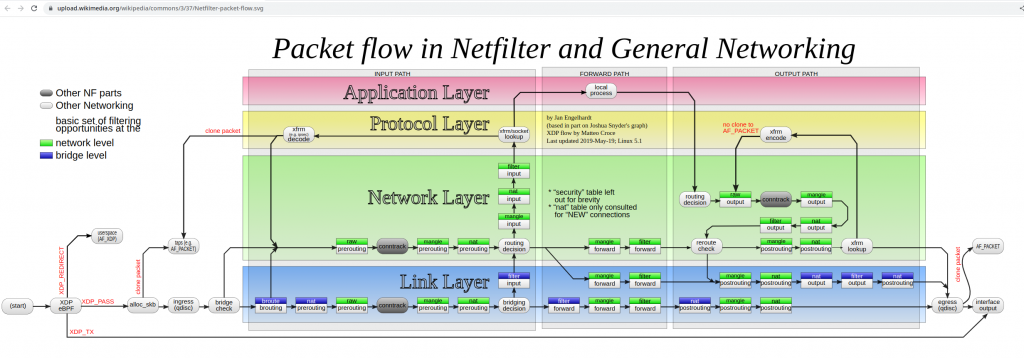

Today I had to troubleshoot a websocket issue. I had never dealt with this before. I was told that the HAProxy config was fine, so it had to be our NGFW doing something nasty at L7.

The connection straight to the server running the websocket service worked fine from my PC, but due to some requirement we need to put that server behind an HAProxy. The connection from my PC to the HAProxy that proxies the websocket service failed…

Funnily enough, HAProxy and the websocket service were running on the same host.

As usual, I took a look at the firewall logs. Nothing wrong there at first sight. Then I took a tcpdump on my PC while connecting to the websocket service directly, and another one while connecting through the HAProxy.

The service is very verbose and it is difficult to follow in the capture files, as it spawns several connections. I started with the easy part: the capture to the HAProxy was showing a lot of TCP retransmissions… The other trace, to the websocket service, was pretty clean.

Taking into account that the path from my PC to the HAProxy server is always the same (and I was going through a VPN), I could think of either an NGFW issue or something between HAProxy and the websocket service (which is a localhost connection).

I was also seeing weird things latency-wise. Some TCP resets were taking more than 200ms to arrive at the server when the average RTT was 3ms.

I tried to take a tcpdump between the HAProxy service and the websocket service, just in case the packet loss was being caused locally. The capture was chaos to follow. I needed a better understanding of how sessions work in HAProxy.

I changed direction: I went to the NGFW and created a rule that disabled any fancy security checks for my traffic to the HAProxy server. I wanted to be sure the firewall was innocent.

It was. Same issue. I tried different browsers and always the same.

So I was nearly sure the problem was in HAProxy, but I had to prove it. I had more or less failed at checking the backend connection (HAProxy to the websocket service), so I took another look at the trace from my PC to HAProxy. I was quite frustrated: there were so many connections opened, and then so many retransmissions, that I couldn't really see any problem.

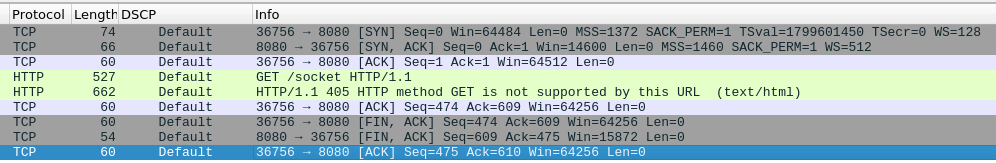

By luck, I noticed that in the good trace (the one going directly to the websocket service) I could see an HTTP GET request for “socket” from my PC. Keep in mind that I have no idea how websocket works. I tried to find a similar request in the HAProxy trace, and I saw the problem…

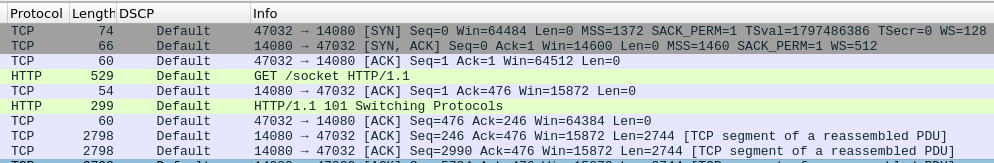

and this is a good connection:

So in the end, HAProxy was at fault (we don't know how to fix it yet, though) and my firewall (for once) is innocent.

In summary: I got overwhelmed by the TCP retransmissions. I was lucky that I saw the GET for “socket” and assumed that it had to be the way the websocket connection gets established. So I should have started by investigating how a websocket connection is established. Also, I didn't manage to find the HAProxy logs; I am pretty sure I would have found the same answer there. So I need to learn how to check them.
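For reference, this is roughly how a websocket connection gets established according to RFC 6455: the client sends an HTTP GET with `Upgrade: websocket`, and the server is expected to answer with `101 Switching Protocols` and a `Sec-WebSocket-Accept` header derived from the client's key. Below is a minimal Python sketch of that check, something to compare against what shows up in a capture. The host `ws.example.test` and the path `/socket` in the usage note are placeholders of mine, not the real service, and the helper names are made up for illustration.

```python
import base64
import hashlib

# Fixed GUID that RFC 6455 defines for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def build_handshake(host: str, path: str, key: str) -> str:
    """Build the client's opening handshake (an HTTP/1.1 GET with Upgrade headers)."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Upgrade: websocket",
        "Connection: Upgrade",
        f"Sec-WebSocket-Key: {key}",
        "Sec-WebSocket-Version: 13",
        "",
        "",
    ]
    return "\r\n".join(lines)


def expected_accept(key: str) -> str:
    """Value the server must return in Sec-WebSocket-Accept for a given key."""
    digest = hashlib.sha1((key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def is_upgrade_response(raw: str, key: str) -> bool:
    """Return True only if the raw response completes the websocket handshake."""
    head = raw.split("\r\n\r\n", 1)[0]
    status_line, *header_lines = head.split("\r\n")
    parts = status_line.split(" ", 2)
    if len(parts) < 2 or parts[1] != "101":
        return False  # anything other than 101 means no upgrade happened
    headers = {}
    for line in header_lines:
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip().lower()] = value.strip()
    if headers.get("upgrade", "").lower() != "websocket":
        return False
    tokens = [t.strip().lower() for t in headers.get("connection", "").split(",")]
    if "upgrade" not in tokens:
        return False
    return headers.get("sec-websocket-accept") == expected_accept(key)
```

Calling `build_handshake("ws.example.test", "/socket", key)` gives the kind of GET request I spotted in the good trace. Feeding the server's reply into `is_upgrade_response` tells you whether the upgrade actually went through, which is the first thing I would look for next time, before getting lost in the retransmissions.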

I learned something new. As usual, it didn't come easily or quickly 🙂