VXLAN/EVPN is a technology that I have been trying to understand in more depth since I started my current job. All my networking theory comes from books, so this one is a good base. Keep in mind that it is a bit “old”, as it was released in 2017. In the last few months I have built my confidence with VXLAN/EVPN by working through some issues and testing designs (Arista EVPN L3 Gateway).

As I used to do in the past, I took notes on the book, and I am putting them here too as a good refresher.

1 INTRO

STP for DC issues:

- Convergence: tree recalculation

- Unused links: follow tree…

- Suboptimal forwarding: follow tree…

- No ECMP

- Traffic storm (no TTL in L2)

- Scale: only 4k vlans (12 bits tag)

Leaf/Spine improvements as per above (Clos Network):

- Scalability

- Simple

- Resilience

- Efficiency

- No oversubscription

- ECMP

- Deterministic latency

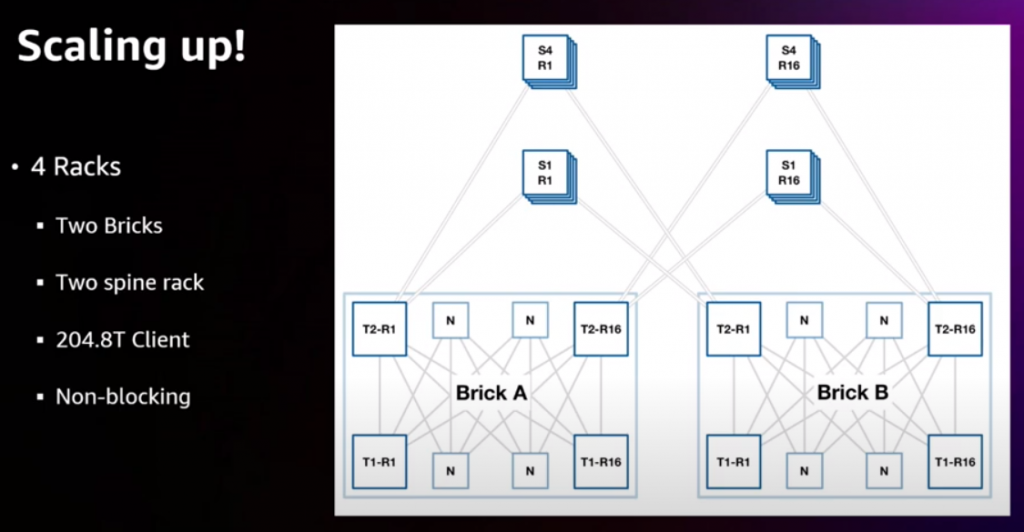

- scale out -> + leaves // scale up (+bw) -> + spines

BUM = Broadcast, Unknown Unicast, Multicast

Fabric Path = MAC in MAC (technology that predates VXLAN). Proprietary to Cisco

VXLAN = Standard, MAC in IP/UDP, VNI = 24 bits -> 16M VLANs! Flood & Learn (F&L): each network has its L2VNI + multicast group (control-plane). BGP EVPN doesn't need F&L, so better control-plane

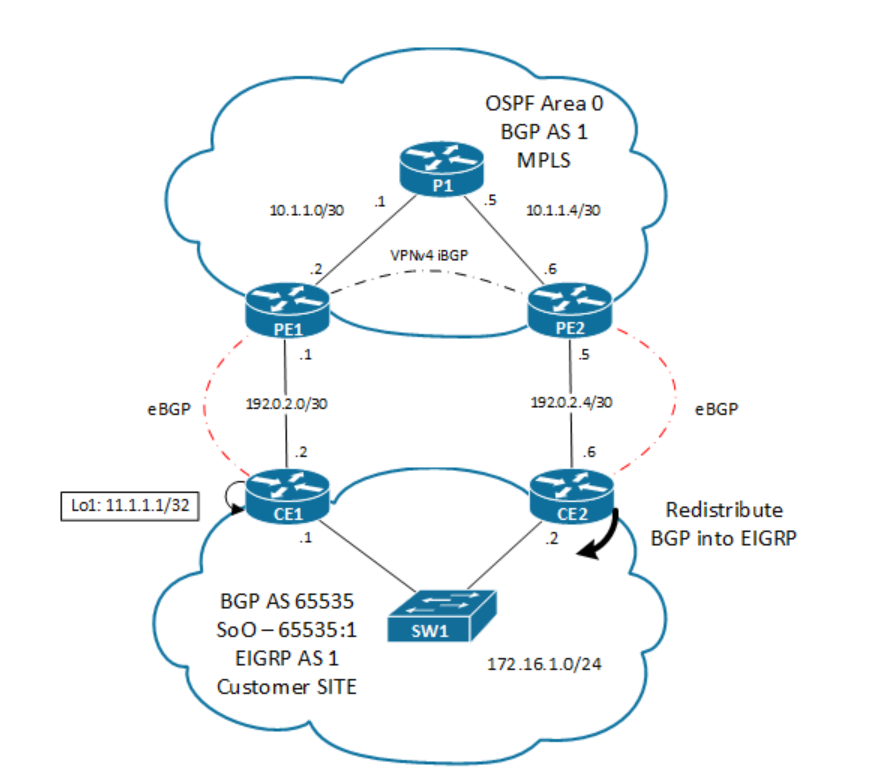

Border Leaf or Border Spine = for external connectivity.

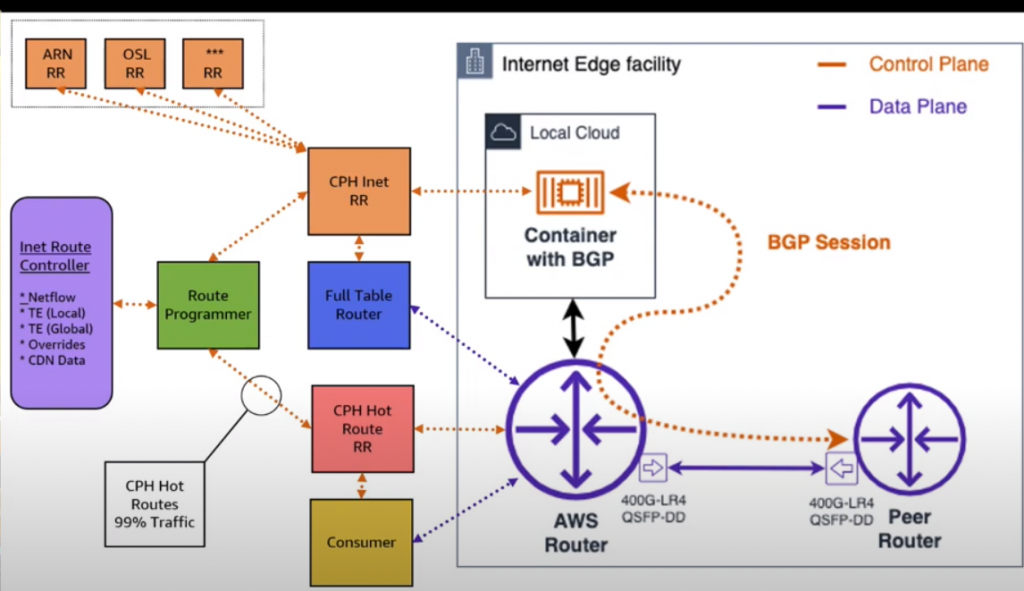

Route-Reflector (RR) or RP (multicast) in Spine

VXLAN – dataplane / EVPN – control-plane

2 BASICS

In DC, most traffic is east-west.

Limit vlan 12 bits (4k) + multi-tenancy? -> overlay: (indirection) abstraction of existing network tech + extend classic network capabilities. (David Wheeler: problem -> indirection)

Underlay -> increase MTU! (overlay overhead)

Handle BUM in underlay? Multicast (PIM – VNI mapped to multicast group = dst IP outer header) or Ingress Replication (head-end replication)

VTEP = Edge device, encap/decap, build overlay

VNI – Virtual Network ID

VXLAN header: original inner 802.1q header of l2 frame is removed and mapped to a VNI, to complete vxlan header. UDP dst port = 4789, src port = based on inner header

overhead = 50 bytes (14 (l2) +20 (l3) + 8 (l4) + 8 (vxlan) = 50). 54 bytes if optional 802.1q tag (4bytes) is added.

ECMP: 5-tuple: src IP, dst IP, proto, src port, dst port -> but only src port changes in vxlan -> that's the entropy!
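To make the header layout and the entropy idea concrete, here is a minimal Python sketch. The 8-byte header layout (flags, reserved bits, 24-bit VNI) follows the VXLAN standard; the hash in `entropy_src_port` is purely an assumption for illustration (real VTEPs use their own hardware hash), as is the choice of the 49152-65535 port range.

```python
import struct
import zlib

VXLAN_UDP_DST_PORT = 4789  # destination port noted above


def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header: 8 flag bits, 24 reserved, 24-bit VNI, 8 reserved."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # 'I' bit set: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def entropy_src_port(inner_flow: tuple) -> int:
    """Hypothetical hash: map the inner flow onto the 49152-65535 port range."""
    digest = zlib.crc32(repr(inner_flow).encode())
    return 49152 + digest % 16384


# Two different inner flows between the same pair of VTEPs usually get
# different outer source ports, which is what lets the underlay ECMP spread them.
flow_a = ("10.1.1.10", "10.1.1.20", 6, 33000, 443)
flow_b = ("10.1.1.11", "10.1.1.20", 6, 33001, 443)
print(vxlan_header(30001).hex(), entropy_src_port(flow_a), entropy_src_port(flow_b))
```

The same inner flow always hashes to the same source port, so packets of one flow stay on one path and are not reordered.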

F&L: Doesn't scale, no control-plane. Multicast replication has a limit, so the alternative is Ingress Replication (IR): every VTEP must be aware of the other VTEPs in the same VNI, and the source VTEP has to replicate each packet to each of them.

BGP EVPN: solution for F&L. Eliminates unnecessary flooding. EVPN carries host MAC, IP, network, VRF and VTEP info. If the VTEP that detected a host doesn't send a new EVPN update (withdraw), the remote VTEPs don't age out the entry for that host.

IMPORTANT! Broadcast traffic (ARP, DHCP, etc) is still flooding!!!

Tenant += VRF

eBGP = implicit next-hop-self for originated

RD = 8 bytes

— type 0: 2 byte ASN + 4 byte value

— type 1: 4 byte IP + 2 byte value

— type 2: 4 byte ASN + 2 byte value
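The three RD layouts above can be checked with a small Python sketch. It packs the 2-byte type field followed by the 6-byte value described in the list; the function names and the sample values are only illustrative.

```python
import ipaddress
import struct


def rd_type0(asn: int, value: int) -> bytes:
    """Type 0: 2-byte ASN + 4-byte assigned value."""
    return struct.pack("!HHI", 0, asn, value)


def rd_type1(ip: str, value: int) -> bytes:
    """Type 1: 4-byte IPv4 address + 2-byte assigned value."""
    return struct.pack("!H4sH", 1, ipaddress.IPv4Address(ip).packed, value)


def rd_type2(asn: int, value: int) -> bytes:
    """Type 2: 4-byte ASN + 2-byte assigned value."""
    return struct.pack("!HIH", 2, asn, value)


# Every variant is 8 bytes long: 2 bytes of type + 6 bytes of value.
for rd in (rd_type0(65000, 30001), rd_type1("10.0.0.1", 32777), rd_type2(4200000000, 10)):
    print(len(rd), rd.hex())
```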

RT: control import/export prefixes in VRF (auto derivation ASN:VNI)

VXLAN EVPN: RFC7432. Focus NVO (Network Virtualization Overlay) -> Route-Types:

— type 2: MAC/IP (host: /32 or /128). Sent once host is learnt. Info about IP is optional. Ext Community: RMAC = Router MAC = source VTEP.

— type 3: Inclusive multicast ethernet tag route -> create distribution list for IR. Generated and sent out as soon as a VNI is configured. Need ASIC support !!!

— type 5: IP prefix route (L3VNI)

“show bgp l2vpn evpn MAC” ->

[Route Type]:[Eth Segment ID]:[Eth Tag Id]:[MAC length]:[MAC]:[IP prefix]:[bit count]

bit count:

— 216: type2 only MAC

— 272: type2 IP/MAC

— 224: type5 IPv4

ExtCommunity: ENCAP:8 -> it is VXLAN

ARP request triggers an IP-MAC.

MAC learnt via BGP is not aged-out via normal process: only BGP delete message deletes the MAC

L3 learnt: depends on hw (FIB)

— HRT (Host Route Table): only for /32 or /128 (big)

— LPM (Longest Prefix Match): TCAM (small)

FIB: [Bridge Domain, RMAC] -> BD maps to L3VNI and RMAC maps to dst VTEP MAC
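The HRT/LPM split can be pictured as a two-stage lookup: an exact match on host routes first, then the longest matching prefix. The Python sketch below is only a software model of that idea (real hardware does this in dedicated tables), and the table contents and next-hop names are made up for the example.

```python
import ipaddress


def lookup(dst, host_routes, prefixes):
    """Return the next hop for dst: exact host match first, then longest prefix."""
    addr = ipaddress.ip_address(dst)
    if addr in host_routes:  # HRT: /32 or /128 entries
        return host_routes[addr]
    matches = [(net, nh) for net, nh in prefixes.items() if addr in net]
    if not matches:
        return None
    # LPM: the most specific covering prefix wins
    return max(matches, key=lambda m: m[0].prefixlen)[1]


hrt = {ipaddress.ip_address("192.168.10.20"): "vtep-2"}
lpm = {
    ipaddress.ip_network("192.168.10.0/24"): "local-svi",
    ipaddress.ip_network("0.0.0.0/0"): "border-leaf",
}
print(lookup("192.168.10.20", hrt, lpm))  # host route learnt via type 2
print(lookup("192.168.10.99", hrt, lpm))  # falls back to the subnet route
print(lookup("8.8.8.8", hrt, lpm))        # falls back to the default route
```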

Type5:

— advertises first-hop-routing: prefix where VTEP is default gateway (IP anycast gateway)

— advertise prefixes from other protocols

Host detection:

— ARP aging = 1500 sec -> If ARP request fails -> type2 deletes are sent. ** Even when ARP entry is deleted, MAC only type2 is still in BGP EVPN CP until MAC aging expires (1800 sec) (sent BGP withdraw)

ARP aging < MAC aging -> avoid unnecessary flooding

Host mobility: VM to send GARP (gratuitous ARP): Highest MAC mobility seq ext community => Best

3 FORWARDING

- Handling BUM or multidestination traffic:

— MC replication in the underlay:

Use MC in UL => 1xL2VNI = 1xMC IP => problem: 2^24 VNIs available -> a stretch for the MC IPs available, plus sw/hw limits (1000’s PIM, IGMP, etc) -> doesn't scale

How to manage VNI-MC mapping? VNI randomly assigned to MC or MC is localized for a set of VNIs.

— Ingress Replication (IR = HER = Head-End-Replication): Unicast mode. VTEP makes n-1 copies of BUM packet and send them as unicast to the n-1 VTEPs of that VNI

replication list? dynamic with BGP EVPN. type 3 (IMET). Replication list is updated when config of a L2VNI in a VTEP occurs –> Big overhead compared with MC.

— Use ARP snooping. ARP request -> populates BGP EVPN CP. 1) If the VTEP knows the dst MAC, it responds itself (this is ARP suppression). 2) If not, it sends the ARP request to all VTEPs using IR or MC. The egress VTEP that has the host connected receives the ARP reply, makes an EVPN type 2 announcement to all VTEPs + sends the ARP reply (as unicast) to avoid any delay.

NX-OS uses MC for BUM by default = flood L2 locally and to all VTEPs in VNI.

MC group for overlay != MC group for underlay.

IGMP snooping (if supported), optional solution, it doesn't depend on hw, just sw.
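The ingress replication behaviour from this list (a replication list per VNI built from type 3 routes, then n-1 unicast copies of each BUM frame) can be sketched in Python as follows. The class and method names are hypothetical, and a real VTEP does the copying in the data plane, not in software like this.

```python
from collections import defaultdict


class ReplicationTable:
    """Per-VNI list of VTEPs, built from EVPN type 3 (IMET) routes."""

    def __init__(self, local_vtep):
        self.local_vtep = local_vtep
        self.members = defaultdict(set)

    def add_imet(self, vni, vtep):
        """A VTEP announced interest in this VNI."""
        self.members[vni].add(vtep)

    def withdraw_imet(self, vni, vtep):
        """A VTEP removed the VNI from its config."""
        self.members[vni].discard(vtep)

    def replicate(self, vni, frame):
        """Return one (destination VTEP, frame) unicast copy per remote VTEP."""
        return [(vtep, frame) for vtep in sorted(self.members[vni])
                if vtep != self.local_vtep]


table = ReplicationTable(local_vtep="10.0.0.1")
for vtep in ("10.0.0.1", "10.0.0.2", "10.0.0.3"):
    table.add_imet(30001, vtep)
copies = table.replicate(30001, b"arp-request")
print(len(copies))  # n-1 copies for n VTEPs in the VNI
```

The cost is visible here: the number of copies grows with the number of VTEPs in the VNI, which is the overhead compared with multicast mentioned above.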

- Distributed IP Anycast Gateway: Implemented at each VTEP, reduces traffic transit. Anycast = one-to-nearest association.

Anycast GW VTEPs share the same MAC -> prevent black-holing for host-mobility (AGM = Anycast GW MAC address). Same AGM is used in all default gw IPs -> no hairpinning.

- Integrated Routing and Bridging (IRB)

— Asymmetric:

bridge-route-bridge at local VTEP

traffic egressing towards a remote VTEP uses a different VNI than the return traffic from the remote VTEP

requires consistent VNI config in all VTEPS

— Symmetric (NXOS):

bridge-route-route-bridge

egress and return use same L3VNI. L2VNI are not used for routing in symmetric IRB

Not all VNIs need to be configured in all VTEPS but for a VRF, L3VNI needs to be configured in all VTEPs.
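A small Python sketch of the difference between the two IRB modes, in terms of which VNI ends up in the VXLAN header. The function name and the VNI numbers are made up for the example; it only encodes the rules listed above.

```python
def vni_on_wire(mode, src_l2vni, dst_l2vni, l3vni):
    """Return the VNI carried in the VXLAN header towards the remote VTEP."""
    if src_l2vni == dst_l2vni:
        return src_l2vni  # same subnet: plain bridging, no routing involved
    if mode == "asymmetric":
        # bridge-route-bridge: ingress VTEP routes into the destination L2VNI
        return dst_l2vni
    if mode == "symmetric":
        # bridge-route-route-bridge: routed traffic always uses the VRF's L3VNI
        return l3vni
    raise ValueError(f"unknown IRB mode: {mode}")


# Host A in L2VNI 30001, host B in L2VNI 30002, VRF L3VNI 50001.
print(vni_on_wire("asymmetric", 30001, 30002, 50001))  # A -> B
print(vni_on_wire("asymmetric", 30002, 30001, 50001))  # B -> A, a different VNI
print(vni_on_wire("symmetric", 30001, 30002, 50001))   # A -> B
print(vni_on_wire("symmetric", 30002, 30001, 50001))   # B -> A, the same L3VNI
```

This also shows why asymmetric IRB needs every L2VNI on every VTEP (the ingress VTEP must know the destination L2VNI), while symmetric IRB only needs the L3VNI of the VRF everywhere.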

Inter VRF routing -> route leaking -> external router or firewall.

BGP extended community = MAC mobility seq. Higher wins. With each move, seq++

End point move triggered by (update via BGP EVPN CP)

— Reverse ARP: only advertises new MAC

— Gratuitous ARP: adverts new MAC/IP

VTEP verifies if endpoint has actually moved.
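The "higher sequence wins" rule for host moves can be sketched in Python like this. The `Type2Route` class is a simplified stand-in for a real EVPN type 2 route, with only the fields needed for the comparison, and the addresses are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Type2Route:
    mac: str
    next_hop: str  # VTEP that advertised the host
    seq: int = 0   # MAC mobility sequence number


def advertise_move(current, new_vtep):
    """The VTEP that sees the host after a move advertises it with seq + 1."""
    return Type2Route(current.mac, new_vtep, current.seq + 1)


def best_route(routes):
    """Among routes for the same MAC, the highest sequence number wins."""
    return max(routes, key=lambda r: r.seq)


old = Type2Route("00:50:56:aa:bb:cc", "10.0.0.1")
new = advertise_move(old, "10.0.0.2")
print(best_route([old, new]).next_hop)  # the new VTEP is preferred
```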

- VPC: MCLAG + LACP: Cisco -> vPC: 2 devices: 1 peer link + 1 keepalive link.

— PIP: primary IP. individual per VPC member per VTEP

— VIP: secondary IP in nve interface. Virtual IP = anycast VTEP at VPC level. It is the next-hop used in EVPN type2/5. ** anycast VTEP != anycast gw

— orphans: blackhole if using VIP -> solution: “advertise-pip” VPC members use PIP instead of VIP for NH in originated EVPN type5 (type2 still uses VIP)

— Router MAC ext community in typ2/5:

—- PIP uses switch RMAC

—- VIP uses a locally derived MAC based on the VIP. Both VPC members derive the same MAC because they share the same VIP. As RMAC ext community is non-transitive and VIPs are unique, no issue

- DHCP: discover, offer, request, ack. DHCP relay: configured in default gw: relay agent uses default gw IP in the giaddr field of DHCP payload. DHCP server uses the giaddr field to find the correct scope. As well, it uses giaddr as dst IP for the answer. Problem with anycast gw because all VTEPs use the same IP -> sol: each VTEP dhcp relay uses a unique IP (loX) that must be routable. How to choose scope? DHCP option 82.
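The scope problem with a unique relay IP can be sketched in Python. This assumes the relay puts the client subnet into the relay agent information option (option 82, link selection sub-option) while giaddr carries the unique loopback; `select_scope` is a hypothetical model of the server side, not the logic of any particular DHCP server.

```python
import ipaddress


def select_scope(scopes, giaddr, link_selection=None):
    """Pick the scope from the link selection hint if present, else from giaddr."""
    key = ipaddress.ip_address(link_selection or giaddr)
    for scope in scopes:
        if key in ipaddress.ip_network(scope):
            return scope
    return None


scopes = ["192.168.10.0/24", "192.168.20.0/24"]
relay_loopback = "10.255.0.11"  # unique per VTEP and routable; the reply goes here

# Without the hint the server cannot map the loopback to a client subnet.
print(select_scope(scopes, relay_loopback))
# With the hint (the anycast gateway subnet) the right scope is found.
print(select_scope(scopes, relay_loopback, link_selection="192.168.10.1"))
```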

4 UNDERLAY

— Clos network = each port equidistant + consistent latency => multistage.

— MTU: vxlan -> avoid fragmentation. vxlan overhead = 50 bytes (14 outer MAC header + 20 outer IP header + 8 outer UDP header + 8 vxlan header, plus an extra 4 if an optional 802.1q tag is added). Normal ethernet MTU 1500 -> Ethernet Frame = 1518 (or 1522 if 802.1q); 18 = 6 MAC src + 6 MAC dst + 2 ether type + 4 FCS. If the underlay stays at MTU 1500 => host MTU 1450. If hosts use jumbo frames of 9000 => vxlan needs 9050. Most network kit supports up to 9216 MTU
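The MTU arithmetic from this bullet, as a short Python sketch. The constant and function names are mine; the numbers are the header sizes listed in these notes.

```python
OUTER_ETHERNET = 14  # outer MAC header
OUTER_IPV4 = 20      # outer IP header
OUTER_UDP = 8        # outer UDP header
VXLAN_HEADER = 8
DOT1Q_TAG = 4        # optional 802.1q tag


def vxlan_overhead(with_dot1q=False):
    """Bytes added by the VXLAN encapsulation."""
    base = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER
    return base + (DOT1Q_TAG if with_dot1q else 0)


def required_underlay_mtu(host_mtu, with_dot1q=False):
    """Underlay MTU needed to carry a given host MTU without fragmentation."""
    return host_mtu + vxlan_overhead(with_dot1q)


def max_host_mtu(underlay_mtu, with_dot1q=False):
    """Largest host MTU that fits a given underlay MTU."""
    return underlay_mtu - vxlan_overhead(with_dot1q)


print(vxlan_overhead(), vxlan_overhead(with_dot1q=True))
print(max_host_mtu(1500), required_underlay_mtu(9000))
```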

— IP Addressing: RID = lo. Use /31 or unnumbered (lo is used for RID) as much as possible. Lo0 (BGP) and Lo1 (VTEP) on IGP. Leaf = Lo0 + Lo1. Spine = only Lo0 because it is not vtep (if using multisite gateway need lo1 as it is vtep). Be sure your ip schema aggregates!!! -> reduce routing table (1x/24 all lo0, 1x/23 all p2p, etc)
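A quick Python sketch of an addressing plan that aggregates, using the standard `ipaddress` module. The blocks (10.0.0.0/24 for Lo0, 10.1.0.0/23 for p2p links) are made-up examples, not values from the book.

```python
import ipaddress
from itertools import islice


def p2p_links(block, count):
    """Carve the first `count` /31 point-to-point subnets out of one block."""
    return list(islice(ipaddress.ip_network(block).subnets(new_prefix=31), count))


def loopbacks(block, count):
    """Take the first `count` host addresses of a block as /32 loopbacks."""
    return [f"{host}/32" for host in islice(ipaddress.ip_network(block).hosts(), count)]


links = p2p_links("10.1.0.0/23", 4)  # one /23 summarises all fabric links
lo0 = loopbacks("10.0.0.0/24", 4)    # one /24 summarises all Lo0 addresses
for lo, link in zip(lo0, links):
    print(lo, link)
```

Because every link and loopback comes out of one parent block, a single summary per block is enough outside the fabric.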

— IGP is OSPF or BGP -> ECMP.

OSPF: use p2p type instead of broadcast -> only LSA-1 !!! low convergence time !!! and small LSDB. If ipv6 -> ospfv3 -> dual stack… two protocols!

ISIS: no IP, works on L2 (CLNS). SPF algo. TLV. NSAP addressing. IP independent.

BGP: path vector (no SPF) if eBGP -> next-hop unchanged (if spine not a vtep). underlay eBGP -> phy to phy // overlay eBGP -> lo to lo (multihop!). If eBGP “route reflector” => “retain route-target all”. If using “Two AS” design (if Spine no vtep) -> spine: ipv4 + evpn => “disable-peer-as-check” // leaf: ipv4 + evpn => allowas-in

- Multicast Routing: more efficient than unicast but needs one extra protocol

— BUM traffic: unicast mode = ingress replication in underlay // multicast mode = use multicast in underlay.

— Unicast: VTEP has to generate n-1 copies of the packet. Replication of data traffic is a data plane operation. VTEP-VNI membership distribution is dynamic via CP BGP EVPN or static via F&L (doesn't scale!).

— Multicast: PIM Any Source Multicast ASM (PIM SM) or PIM BiDir (depends on hw). Can’t mix PIM modes. RP in Spines!

— PIM ASM Anycast RP: in each spine. 1 IP for all spines -> load balancing. (S,G) at VTEP.

— PIM BiDIR: (*,G) at RP = Spines. Difference with Anycast, BiDir creates only a shared tree (*,G) on a per multicast group instead of creating a source tree (S,G) per VTEP per multicast group. Redundancy achieved with “phantom” RP that uses lo with different prefix length.

5 MULTITENANCY (L2-> vlan / L3 -> vrf)

- Bridge Domain: Broadcast domain that represents the scope of a L2 network (vlan). Way of stretching a vlan -> vlan (12bits), vni (24 bits), switch.

- VLANS in VXLAN: vlan local significant, vni is global significant (per switch, per port)

— L2VNI: RD -> RID: vlan+32767. RT -> autogenerate / AS:l2vni (RT+eBGP is manual at underlay)

— VLAN mode: restriction 4K to VNI mapping per switch.

vlan 10

vn-segment 30001

— Bridge domain mode: BD is used instead of vlan-mode. BD implements a BDI instead of a SVI. No restriction of 4k VNI mapping -> hw restriction:

- VRF in VXLAN BGP EVPN: VRF-Lite doesn't scale. L3 at Leaf. EVPN -> scale CP -> RD+RT

- L3 Multitenancy: L3VNI global scope, vrf name is local significant. Auto: RD= RID:VRF_ID / RT= AS:L3VNI (RT+eBGP is manual at underlay)

— Summary: 1) Associate L3VNI into VTEP interface 2) core-vlan associated with L3VNI 3) SVI created in VRF

router bgp X

vrf VRF-A

address-family ipv4 unicast

advertise l2vpn evpn

---

interface nve1

member vni 50001 associate-vrf

---

vlan 2501

vn-segment 50001

---

interface vlan 2501

vrf member VRF-A

no shut

mtu 9216

ip forward

---

vrf context VRF-A

vni 50001

rd auto

address-family ipv4 unicast

route-target both auto

route-target both auto evpn
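What "rd auto" and "route-target ... auto" end up producing, following the derivation rules noted in this section (L2VNI: RD = RID:vlan+32767, RT = AS:L2VNI; L3VNI: RD = RID:VRF_ID, RT = AS:L3VNI), can be sketched in Python. The router ID, AS number and VRF ID below are made-up values.

```python
L2_RD_OFFSET = 32767  # offset added to the VLAN ID for L2VNI RDs, per the notes


def l2vni_rd(router_id, vlan_id):
    """Auto RD for an L2VNI: router ID plus (VLAN ID + 32767)."""
    return f"{router_id}:{vlan_id + L2_RD_OFFSET}"


def l3vni_rd(router_id, vrf_id):
    """Auto RD for a VRF (L3VNI): router ID plus the local VRF ID."""
    return f"{router_id}:{vrf_id}"


def auto_rt(asn, vni):
    """Auto RT for either kind of VNI: AS number plus VNI."""
    return f"{asn}:{vni}"


print(l2vni_rd("10.0.0.1", 10), auto_rt(65000, 30001))  # vlan 10 / vn-segment 30001
print(l3vni_rd("10.0.0.1", 3), auto_rt(65000, 50001))   # VRF-A with L3VNI 50001
```

Since the RT embeds the AS number, leaves in different AS numbers derive different RTs, which is why the notes say RT has to be set manually with an eBGP underlay.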

6 UNICAST FORWARDING

- Intra-Subnet Unicast Forwarding (Bridging) (Classic Ethernet)

— ARP suppression disabled: ARP request -> BUM mode => Multicast or IR -> BGP EVPN for source MAC

— ARP suppression enabled: ARP snooping -> source MAC -> generates EVPN type2. If the dst MAC is known by the ingress VTEP, then it generates the ARP reply (ARP proxy)

— commands:

show bgp l2vpn evpn vni-id 30001

show l2route evpn mac all <--|-- verifies FIB is updated

show mac address-table vlan X <--|

// Announce IP L3 GW manually

interface vlan 10

vrf member X

ip address a/b tag 12345

---

route-map RM permit 10

match tag 12345

---

router bgp Z

vrf X

address-family ipv4 unicast

advertise l2vpn evpn

redistribute direct route-map RM

- Inter-subnet unicast forwarding (routing)

— Symmetric IRB (bridge-route-route-bridge): VXLAN-routed traffic uses the same L3VNI in each direction. VRF -> l3vni -> mapping in all VTEPs.

— Distributed IP Anycast GW: anycast GW MAC (AGM) It is a VTEP. local routing in a VTEP -> no vxlan is used.

— Distributed behind remote VTEP (routing) -> vxlan > inner MAC header (SMAC = VTEP1 router MAC / DMAC = VTEP2 router MAC). RMAC is encoded in BGP EVPN NLRI as an extended community.

— Silent Hosts:

— Dest IP unknown + dst bridge domain is local to ingress VTEP => IP lookup hits LPM (ie /24) -> because of the distributed IP Anycast GW -> chooses local route (lowest AD) -> triggers ARP request for dst IP (because unknown) in a different VNI !! -> BUM forwarding -> reaches other VTEPs.

— No L2 extension present:

show bgp l2vpn evpn vni-id X <-- 1) verify BGP RIB

show bgp ip unicast vrf Y 2) verify RIB (RT worked fine)

show ip arp vrf Y 3) verify FIB

- Forwarding with dual-homed endpoints: VPC -> anycast VTEP = VIP. Egress (outer src IP = VIP when traffic leaves the ingress VTEP). Ingress (outer dst IP = VIP when return traffic leaves the egress VTEP -> ECMP to either of the VTEPs behind the VIP)

— orphan: traffic may cross VPC peer-link because NH=VIP. L2/L3 announcements in VPC -> NH=VIP. If routing needed between VTEP1 and VTEP2 (both belong to same VPC) -> BGP or VRF-lite or advertise type5 with “PIP” from each VTEP instead of VIP (preferred)

- IPv6: Anycast GW MAC (AGM) is shared between ipv4/ipv6. Underlay only ipv4 -> overlay ipv6 communication => NH VTEP=ipv4.

7 MULTICAST FORWARDING

Handling MC in overlay.

EVPN Type3 -> (unicast is used to handle BUM) VTEP announces interest in a L2VNI

Initially, VXLAN handled L2 MC without IGMP snooping => L2 MC flooded to all VTEPs in that VNI even if not interested.

- L2 MC forwarding = Intra-subnet MC. Same VNI = broadcast domain. In MC mode, underlay maps L2VNI to MC group.

— IGMP in VXLAN BGP EVPN:

— Classic IGMP snooping: Traffic is still flooded unconditionally as long as VTEPs are member of that VNI. MC is dropped at VTEPs egress.

— Improved IGMP snooping: “ip igmp snooping disable-nve-static-route-port” -> conditional addition of a VTEP to the Outgoing Interface List (OIL) for a given VNI.

- L2 MC forwarding in VPC: one of the two peers of VPC -> elected DF (lowest cost to RP). Election process: Both VPC peers send PIM join to RP using Anycast VTEP IP (secondary IP in lo1). RP sends only 1 reply to anycast IP, this is hashed to one VPC peer -> the peer with the (S,G) is the DF (S=VTEP anycast IP, G=MC VNI mapping)

- L3 MC forwarding = inter-subnet MC. Not much info, something expected in 2017.

8 EXTERNAL CONNECTIVITY

— Border Leaf: VTEP, few flows N-S. Extra hop. No end-points. SS doesn't need to be a VTEP.

— Border Spine: Spine becomes VTEP. Most flows N-S

— Extended L3 connectivity (L3 handoff):

— Wiring:

—- Full mesh: most resilient, no sync required between border nodes.

—- U-shape: sync link between border nodes.

— VRF-Lite/Inter-AS opt-A: BGP + redist + summarization, 802.1q. VRF-Lite-> SVI (needs BFD), subinterface (recommended) + ebgp

— Extended L2 connectivity: End-point mobility -> RARP (non-IP)

- Classic Ethernet + VPC: VPC -> anycast VTEP IP (secondary IP in lo1) -> NH = anycast VIP (type2). “advertise pip” for type5 NH = VPC physical IP (primary lo1).

* BPDU not transported in VXLAN -> Use VPC between STP switch and VTEPs.

- Extranet + Shared Services: Internet, DNS, DHCP, etc.

— VRF route-leaking: tenant VRF <-> shared VRF (dhcp, dns, etc) -> route leaking: CP leaking at ingress VTEP, DP leaking at egress VTEP. VXLAN uses VNI associated with source VRF for remote traffic. Problem: force consistent config in VTEP with leaking. Scalability (asymmetric IRB)

— Downstream VNI assignment: egress VTEP dictates the VNI to be used by the ingress VTEP with downstream VNI assignment via CP

9 MULTIPOD, MULTIFABRIC, DCI

- OTV vs VXLAN: VXLAN frame similar to OTV. OTV is transport agnostic IP-based solution.

— OTV includes CP and DP. VXLAN only DP (it needs BGP EVPN for CP)

— OTV provides multihoming (redundancy) using a DF per VLAN, doesn't need VPC. VXLAN needs VPC to provide multihoming.

— OTV has loop prevention. VXLAN needs BPDU guards + storm control.

— ARP suppression enabled in both. Unknown unicast is dropped in OTV. VXLAN+EVPN doesn't stop unknown unicast.

- Multipod: LS-SS + super spine layer. Prefix scale MAC/IP? Spine or Super-Spine needs to be BGP RR. MC -> scale of the Output Interface list (OIF). Max 65k LS. Single DP extends pod to pod = single fabric.

- Multifabric: Difference from multi-pod, complete segregation CP and DP -> interconnect at border -> stitching VNIs, -> DCI design.

- Interpod / Interfabric: Broadcast storm in overlay reaches all pods if L2 extended to all pods.

— opt-1: Multipod, single DP end to end. problem: failure domain, no separation pods (vxlan encap end to end)

— opt-2: Multifabric: DCI at border of fabric using classic Ethernet (VRF-lite + 802.1q). Better scale, MAC/IP not spread across all VTEPs (VXLAN encap only inside fabric). VXLAN ends at border device. Problem: DCI is bottleneck.

— opt-3: Multisite: option2 + re-originate L3 routing info (MPLS L3EVPN) VXLAN ends at border fabric -> DCI encap in MPLS -> other end removes MPLS and then back to VXLAN.

— opt-4: Multisite L2: option 3 for L2. OTV or EVPN. VNI-VNI stitching.

* Multisite EVPN VXLAN using BGW -> IETF draft-sharma-multi-site-evpn 2016

10 L4-7 SERVICES INTEGRATION

- Firewalls in VXLAN BGP EVPN:

— routing mode: use L3

— bridging mode: “bump in the wire”, VLAN stitching

— FW redundancy with static routing: ok if HA FW connected to same LS pair (VPC). If FW in different LS -> suboptimal routing -> 2 solutions: 1) static route tracking, 2) static route at remote LS -> static route in ALL LS that need to reach the FW -> LS will learn type2 of FW via active LS.

- Inter-Tenant / Tenant-Edge FW: security enforcement at edge/exit of a tenant/VRF. VRF stitching located at Border LS.

— Intra-tenant FW: E-W firewall = FW inside VRF.

— deployment:

—- FW route mode + default GW for all VLANs => VXLAN only at L2 => no VRFs, no anycast gw.

—- FW bridge mode: all network belong to same subnet. VXLAN + distributed IP anycast gw. FW connected to distributed IP anycast GW LS.

—- PBR: Policy-Based Routing

— Mixing intra-tenant and inter-tenant:

— Intra-tenant:

—- L2 (E-W): FW is GW. LS only extends L2 -> vxlan only l2, no distributed IP anycast gw. BL trunk to FW to extend L2.

—- L3: LS uses distributed IP anycast gw.

— Inter-tenant: default route pointing to FW -> redistribute via BGP EVPN

- Load Balancer: “stateful”

— one-arm source-NAT: LB connected with 1 link / PO to LS.

— Direct VIP subnet approach: LB VIP + LB physical IP in same range. VIP advertised via type2

— Indirect VIP subnet approach: needs static route (like FW example) -> type5.

— source-NAT -> client IP is hidden, servers return traffic to LB

— service chains: LB+FW: FW belongs to BL, LB belongs to Service Leaf. If 2-Arm LB -> VRF-transit between FW-LB. If 1-arm LB -> no transit-vrf, source NAT.

11 FABRIC MANAGEMENT

- POAP: out-of-band (mgmt port) needs dhcp relay. inband (front panel ports)

- NRFU

- OAM:

show mac address-table

show l2route evpn mac all

show vlan id X vn-segment

show bgp l2vpn evpn vni-id Z

show bgp l2vpn evpn MAC

show ip arp vrf Y

show forwarding vrf Y adj

show forwarding up local-host-db vrf Y

show l2route evpn mac-ip all

show bgp l2vpn evpn IP

show ip route vrf Y IP

show nve internal bgp remote database

show nve peers detail

ping nve ip unknown vrf X payload ip DST SRC port SRC DST proto 6 payload-end vni 50000 verbose

traceroute ...

pathtrace ...