BIND: Interesting links about BIND performance and lab setup. DNS is the typical technology that looks straightforward, but as soon as you dig a bit it turns out to be a world of its own.

LACP: Interesting blog post about troubleshooting details. As above, this is the typical tech that you take for granted until it breaks, and then you really need to understand how it works to troubleshoot it. So I learned a bit (although the blog is “old”).

Chiplets: Very good blog post explaining how the industry got to chiplets. Interesting evolution, and a nice touch to mention the networking industry and not just CPU/GPU.

As the process node shrank, manufacturing became more complex and expensive, leading to a higher cost per square millimeter of silicon. Die cost does not scale linearly with die area. The cost of the die more than doubles with doubling the die area due to reduced yields (number of good dies in a wafer).

Instead of packing more cores inside a large die, it may be more economical to develop medium-sized CPU cores and connect them inside the package to get higher core density at the package level. These packages with more than one logic die inside are called multi-chip modules (MCMs). The dies inside the multi-chip modules are often referred to as chiplets.
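
To put rough numbers on the yield argument in the quoted passage, here is a minimal sketch. It assumes a simple Poisson yield model and a common dies-per-wafer approximation. The wafer cost, defect density and die areas are made-up values for illustration only, and packaging cost for the multi-chip case is ignored.

```python
import math


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Approximate gross dies per wafer, with a correction for edge loss."""
    radius = wafer_diameter_mm / 2
    return (math.pi * radius ** 2 / die_area_mm2
            - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))


def poisson_yield(die_area_mm2, defects_per_mm2):
    """Fraction of good dies under a Poisson defect model (an assumption)."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def cost_per_good_die(die_area_mm2, wafer_cost=10_000.0, defects_per_mm2=0.001):
    """Wafer cost spread over the good dies only (all inputs hypothetical)."""
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_mm2)
    return wafer_cost / good_dies


small_die = cost_per_good_die(200.0)    # one medium-sized die
monolithic = cost_per_good_die(400.0)   # one die with double the area
two_chiplets = 2 * small_die            # same total silicon, split in two

print(f"200 mm2 die:        {small_die:.2f}")
print(f"400 mm2 die:        {monolithic:.2f}")
print(f"2 x 200 mm2 dies:   {two_chiplets:.2f}")
print(f"cost ratio 400/200: {monolithic / small_die:.2f}")
```

With these assumed inputs, doubling the area more than doubles the cost per good die, and two medium dies come out cheaper than one large die. A real comparison would also have to account for packaging and die-to-die interconnect costs.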

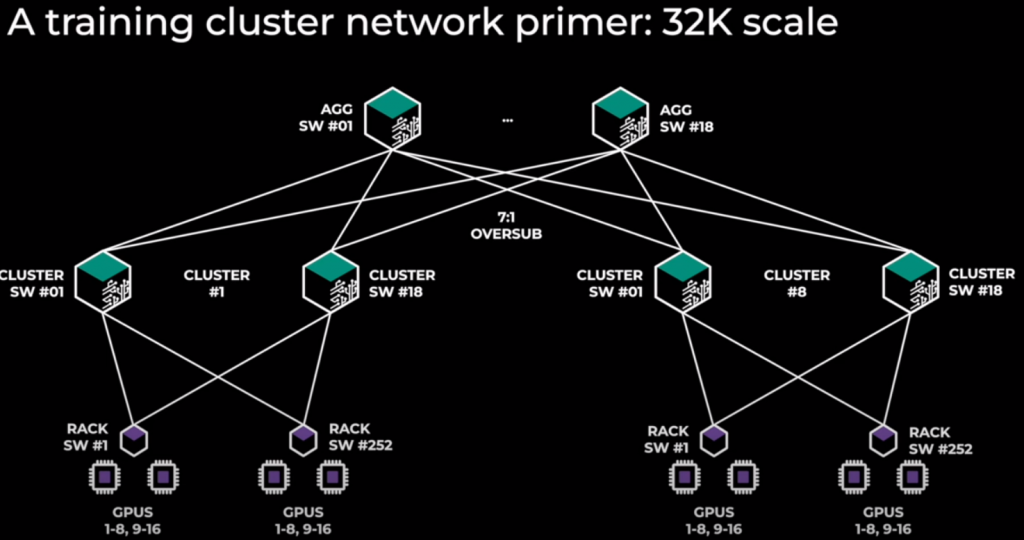

AI/HPC Networking: Nice summary of AI vs HPC networking and what each hyperscaler and vendor is doing. For me, the most interesting part is how to get proper load balancing of flows, like AWS SRD does. Every network vendor or software stack should be aiming for that goal, ideally as an actual standard. I guess it is not easy.

High performance requirements can create vendor lock-in. It doesn’t matter if it is IB or Ethernet. So pick your evil.

Spray ML/AI workloads: Following on from the load-balancing point above, this is an interesting article about how to load-balance ML workloads when the traffic is just one elephant flow. You need adaptive routing in your fabric/switches, NICs that support it, and support from your code/library.
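
A toy sketch of why a single elephant flow is a problem for classic hash-based ECMP, and what per-packet spraying changes. This is not how any particular switch or NIC implements it: the 5-tuple, the hash choice and the round-robin "spray" are all assumptions for illustration, and real adaptive routing picks links based on congestion rather than a simple counter.

```python
import hashlib
from collections import Counter

NUM_LINKS = 4
NUM_PACKETS = 1000

# Hypothetical 5-tuple: (src IP, dst IP, protocol, src port, dst port)
elephant_flow = ("10.0.0.1", "10.0.0.2", 17, 49152, 4791)


def ecmp_link(flow, num_links):
    """Hash the 5-tuple: every packet of the same flow lands on the same link."""
    key = "|".join(map(str, flow)).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % num_links


def spray_link(packet_seq, num_links):
    """Per-packet round-robin, a simple stand-in for packet spraying."""
    return packet_seq % num_links


ecmp_load = Counter(ecmp_link(elephant_flow, NUM_LINKS) for _ in range(NUM_PACKETS))
spray_load = Counter(spray_link(seq, NUM_LINKS) for seq in range(NUM_PACKETS))

print("ECMP  packets per link:", dict(ecmp_load))
print("Spray packets per link:", dict(sorted(spray_load.items())))
```

With hashing, all packets of the flow pile onto one link while the others sit idle. Spraying spreads them evenly, but packets can then arrive out of order, which is why the NIC and the library need to cooperate.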