This week I watched some interesting networking-related videos from NVIDIA GTC. It is a pain that you need to use a “work” email to register…

- S51839 – Designing the Next Generation of AI Systems:

— Quick summary: it seems any HPC network needs to use InfiniBand… NVIDIA has a solution for every size. They can even provide a POD solution!!! All the cloud providers offer NVIDIA's services.

- S51112 – How to Design an AI Supercomputer for Fast Distributed Training, and its Use Cases:

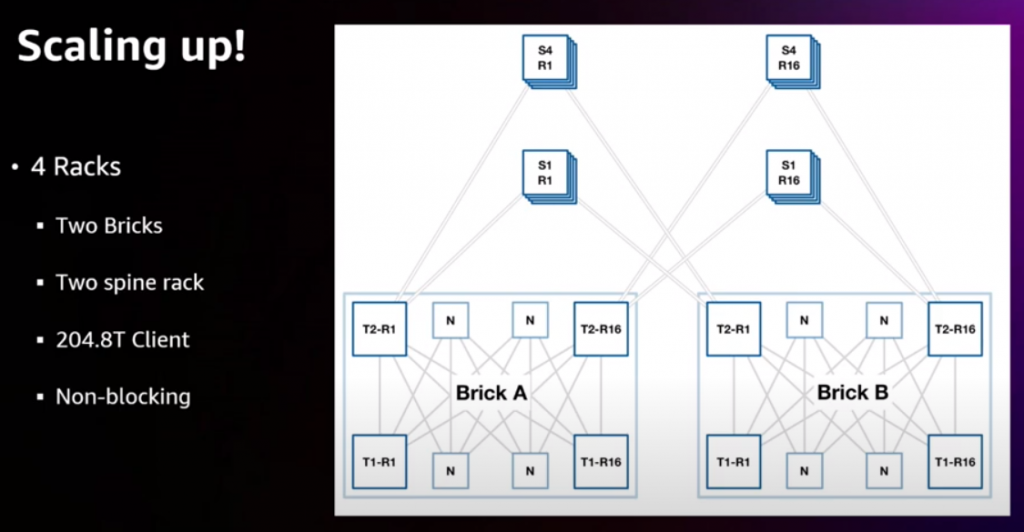

— Very interesting talk from NEC Japan. They built a network based on Ethernet switches for HPC with GPUs (not IB, as seen in the other video). They are also heavy on RDMA/RoCEv2, and it seems they have dedicated ports in the network for storage, management, etc. They are very happy with Cumulus Linux as the NOS.

- S51339 – Hit the Ground Running with Data Center Digital Twin Automation:

— NVIDIA Air is an interesting tool for creating labs. I expected the demonstration to show off and build a huge network. “Digital Twin” looks like the new buzzword in the network automation world.

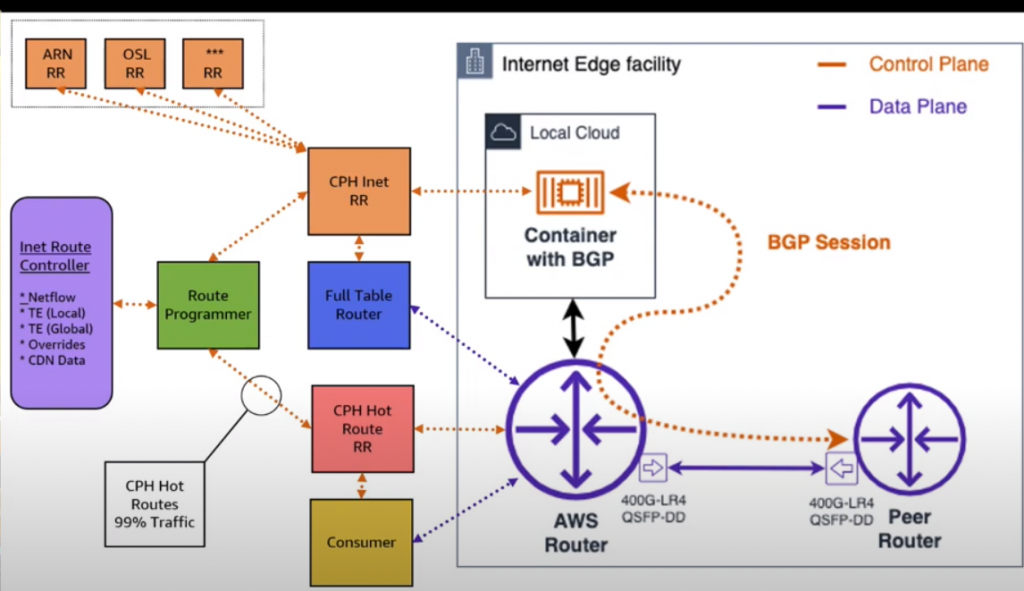

- S51751 – Powering Telco Cloud Services with Open Accelerated Ethernet:

— This one is from Comcast. It is very interesting how “big” SONiC seems to be becoming. NVIDIA is the second-largest contributor to SONiC after M$! I need to try SONiC at some point.

- S51204 – Transforming Clouds to Cloud-Native Supercomputing Best Practices with Microsoft Azure:

— Obviously, this one is about building NVIDIA-based supercomputers in M$ Azure. Again, all InfiniBand.

And another thing, the Spectrum-4 switch looks insane.