Finally, I am trying to set up MPLS L3VPN.

Again, I am following the author's post but adapting it to my environment, using libvirt instead of VirtualBox and Debian 10 as the VM. All my data is here.

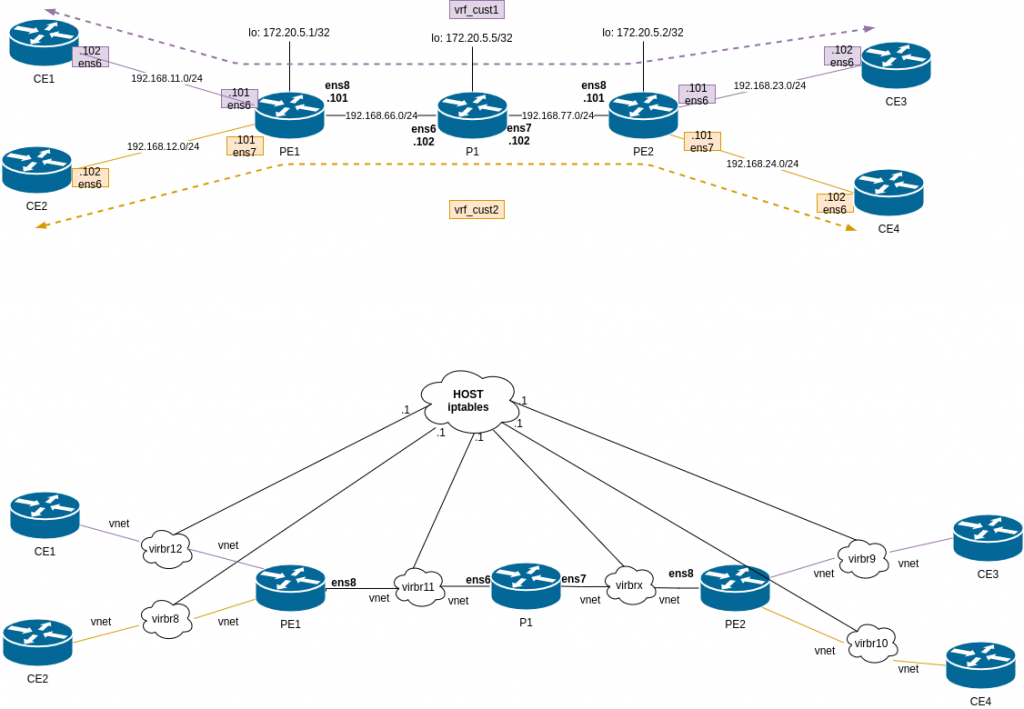

This is the diagram for the lab:

The difference from lab3 and lab2: we have P1, which is a pure P router. It only handles labels and doesn't run any BGP.

This time the FRR config for all devices is generated automatically via gen_frr_config.py (in lab2 all config was manual).
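The real gen_frr_config.py is not shown here, so the following is only a minimal sketch of what template-based generation for a PE might look like. The function name `render_pe_config`, both templates, and the RD/RT numbering (router ID or AS number plus the VRF table ID) are assumptions for illustration; the addresses, AS number and VRF names are the ones used in this lab.

```python
from string import Template

# Hypothetical templates: the exact lines produced by the real
# gen_frr_config.py are not known, this is just one plausible layout.
PE_TEMPLATE = Template("""\
router bgp $asn
 bgp router-id $router_id
 neighbor $peer remote-as $asn
 neighbor $peer update-source lo
 address-family ipv4 vpn
  neighbor $peer activate
 exit-address-family
!
""")

VRF_TEMPLATE = Template("""\
router bgp $asn vrf $vrf
 address-family ipv4 unicast
  redistribute connected
  label vpn export auto
  rd vpn export $router_id:$vrf_id
  rt vpn both $asn:$vrf_id
  export vpn
  import vpn
 exit-address-family
!
""")


def render_pe_config(asn, router_id, peer, vrfs):
    """Return the BGP part of an FRR config for one PE.

    vrfs maps VRF name -> numeric ID (assumed here to be the Linux table ID).
    """
    parts = [PE_TEMPLATE.substitute(asn=asn, router_id=router_id, peer=peer)]
    for vrf, vrf_id in vrfs.items():
        parts.append(
            VRF_TEMPLATE.substitute(
                asn=asn, router_id=router_id, vrf=vrf, vrf_id=vrf_id
            )
        )
    return "".join(parts)


if __name__ == "__main__":
    print(render_pe_config(65010, "172.20.5.1", "172.20.5.2",
                           {"vrf_cust1": 10, "vrf_cust2": 20}))
```

Keeping the per-VRF stanza in its own template means adding a third customer only needs one more entry in the `vrfs` dictionary.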

Again, the environment is configured via a Vagrantfile plus the l3vpn_provisioning script. This is a mix of lab2 (install FRR), lab3 (define VRFs) and lab1 (configure MPLS at the Linux level).

So after some tuning, everything is installed and routing looks correct (although, and I don't know why, I have to reload FRR to get the properly generated BGP config in PE1 and PE2; P1 is fine).
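For the "MPLS at the Linux level" part, the settings that matter are the kernel modules and a handful of sysctls. The sketch below only builds the list of settings; the helper names are hypothetical and the provisioning script itself may do this differently. The values (100000 platform labels, input enabled only on the core-facing interface) mirror what the `sysctl` and `lsmod` output later in this post shows for PE2.

```python
# Kernel modules seen in the lsmod output on PE2.
MPLS_MODULES = ["mpls_router", "mpls_iptunnel"]


def mpls_sysctl_lines(mpls_interfaces, platform_labels=100000):
    """Build sysctl.conf-style lines that enable MPLS forwarding.

    mpls_interfaces: interfaces that should accept labelled packets
    (only the core-facing ones, e.g. ens8 on the PEs).
    """
    lines = [f"net.mpls.platform_labels = {platform_labels}"]
    for ifname in mpls_interfaces:
        lines.append(f"net.mpls.conf.{ifname}.input = 1")
    return lines


if __name__ == "__main__":
    for module in MPLS_MODULES:
        print(f"modprobe {module}")
    for line in mpls_sysctl_lines(["ens8"]):
        print(line)
```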

So let’s see PE1:

IGP (IS-IS) is up:

PE1# show isis neighbor

Area ISIS:

System Id Interface L State Holdtime SNPA

P1 ens8 2 Up 30 2020.2020.2020

PE1#

PE1# exit

root@PE1:/home/vagrant#

BGP is up to PE2 and we can see two prefixes received in the IPv4 VPN address family:

PE1#

PE1# show bgp summary

IPv4 Unicast Summary:

BGP router identifier 172.20.5.1, local AS number 65010 vrf-id 0

BGP table version 0

RIB entries 0, using 0 bytes of memory

Peers 1, using 21 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

172.20.5.2 4 65010 111 105 0 0 0 01:39:14 0 0

Total number of neighbors 1

IPv4 VPN Summary:

BGP router identifier 172.20.5.1, local AS number 65010 vrf-id 0

BGP table version 0

RIB entries 11, using 2112 bytes of memory

Peers 1, using 21 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt

172.20.5.2 4 65010 111 105 0 0 0 01:39:14 2 2

Total number of neighbors 1

PE1#

Checking the routing tables, we can see the remote prefixes in both VRFs, together with the labels needed, so that's good.

PE1# show ip route vrf all

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

VRF default:

C>* 172.20.5.1/32 is directly connected, lo, 02:19:16

I>* 172.20.5.2/32 [115/30] via 192.168.66.102, ens8, label 17, weight 1, 02:16:10

I>* 172.20.5.5/32 [115/20] via 192.168.66.102, ens8, label implicit-null, weight 1, 02:18:34

I 192.168.66.0/24 [115/20] via 192.168.66.102, ens8 inactive, weight 1, 02:18:34

C>* 192.168.66.0/24 is directly connected, ens8, 02:19:16

I>* 192.168.77.0/24 [115/20] via 192.168.66.102, ens8, label implicit-null, weight 1, 02:18:34

C>* 192.168.121.0/24 is directly connected, ens5, 02:19:16

K>* 192.168.121.1/32 [0/1024] is directly connected, ens5, 02:19:16

VRF vrf_cust1:

C>* 192.168.11.0/24 is directly connected, ens6, 02:19:05

B> 192.168.23.0/24 [200/0] via 172.20.5.2 (vrf default) (recursive), label 80, weight 1, 02:13:32

via 192.168.66.102, ens8 (vrf default), label 17/80, weight 1, 02:13:32

VRF vrf_cust2:

C>* 192.168.12.0/24 is directly connected, ens7, 02:19:05

B> 192.168.24.0/24 [200/0] via 172.20.5.2 (vrf default) (recursive), label 81, weight 1, 02:13:32

via 192.168.66.102, ens8 (vrf default), label 17/81, weight 1, 02:13:32

PE1#

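Now check LDP and MPLS labels. Everything looks sane. PE1 has local LDP labels for P1's loopback (16), the 192.168.77.0/24 link (17) and PE2's loopback (18, swapped to P1's label 17), plus one BGP label for each VRF (80 and 81).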

PE1# show mpls table

Inbound Label Type Nexthop Outbound Label

16 LDP 192.168.66.102 implicit-null

17 LDP 192.168.66.102 implicit-null

18 LDP 192.168.66.102 17

80 BGP vrf_cust1 -

81 BGP vrf_cust2 -

PE1#

PE1# show mpls ldp neighbor

AF ID State Remote Address Uptime

ipv4 172.20.5.5 OPERATIONAL 172.20.5.5 02:20:20

PE1#

PE1#

PE1# show mpls ldp binding

AF Destination Nexthop Local Label Remote Label In Use

ipv4 172.20.5.1/32 172.20.5.5 imp-null 16 no

ipv4 172.20.5.2/32 172.20.5.5 18 17 yes

ipv4 172.20.5.5/32 172.20.5.5 16 imp-null yes

ipv4 192.168.11.0/24 0.0.0.0 imp-null - no

ipv4 192.168.12.0/24 0.0.0.0 imp-null - no

ipv4 192.168.66.0/24 172.20.5.5 imp-null imp-null no

ipv4 192.168.77.0/24 172.20.5.5 17 imp-null yes

ipv4 192.168.121.0/24 172.20.5.5 imp-null imp-null no

PE1#

PE2 shows a similar view.

On P1, our P router, we only care about LDP and IS-IS:

P1#

P1# show mpls table

Inbound Label Type Nexthop Outbound Label

16 LDP 192.168.66.101 implicit-null

17 LDP 192.168.77.101 implicit-null

P1# show mpls ldp neighbor

AF ID State Remote Address Uptime

ipv4 172.20.5.1 OPERATIONAL 172.20.5.1 02:23:55

ipv4 172.20.5.2 OPERATIONAL 172.20.5.2 02:21:01

P1#

P1# show isis neighbor

Area ISIS:

System Id Interface L State Holdtime SNPA

PE1 ens6 2 Up 28 2020.2020.2020

PE2 ens7 2 Up 29 2020.2020.2020

P1#

P1# show ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,

T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,

F - PBR, f - OpenFabric,

> - selected route, * - FIB route, q - queued, r - rejected, b - backup

K>* 0.0.0.0/0 [0/1024] via 192.168.121.1, ens5, src 192.168.121.253, 02:24:45

I>* 172.20.5.1/32 [115/20] via 192.168.66.101, ens6, label implicit-null, weight 1, 02:24:04

I>* 172.20.5.2/32 [115/20] via 192.168.77.101, ens7, label implicit-null, weight 1, 02:21:39

C>* 172.20.5.5/32 is directly connected, lo, 02:24:45

I 192.168.66.0/24 [115/20] via 192.168.66.101, ens6 inactive, weight 1, 02:24:04

C>* 192.168.66.0/24 is directly connected, ens6, 02:24:45

I 192.168.77.0/24 [115/20] via 192.168.77.101, ens7 inactive, weight 1, 02:21:39

C>* 192.168.77.0/24 is directly connected, ens7, 02:24:45

C>* 192.168.121.0/24 is directly connected, ens5, 02:24:45

K>* 192.168.121.1/32 [0/1024] is directly connected, ens5, 02:24:45

P1#

So, as usual, let's test connectivity. I will ping from CE1 (connected to PE1) to CE3 (connected to PE2); both belong to the same VRF, vrf_cust1.

First of all, I had to modify iptables on my host to avoid unnecessary NAT (iptables masquerade) between CE1 and CE3.

# iptables -t nat -vnL LIBVIRT_PRT --line-numbers

Chain LIBVIRT_PRT (1 references)

num pkts bytes target prot opt in out source destination

1 15 1451 RETURN all -- * * 192.168.77.0/24 224.0.0.0/24

2 0 0 RETURN all -- * * 192.168.77.0/24 255.255.255.255

3 0 0 MASQUERADE tcp -- * * 192.168.77.0/24 !192.168.77.0/24 masq ports: 1024-65535

4 18 3476 MASQUERADE udp -- * * 192.168.77.0/24 !192.168.77.0/24 masq ports: 1024-65535

5 0 0 MASQUERADE all -- * * 192.168.77.0/24 !192.168.77.0/24

6 13 1754 RETURN all -- * * 192.168.122.0/24 224.0.0.0/24

7 0 0 RETURN all -- * * 192.168.122.0/24 255.255.255.255

8 0 0 MASQUERADE tcp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

9 0 0 MASQUERADE udp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

10 0 0 MASQUERADE all -- * * 192.168.122.0/24 !192.168.122.0/24

11 24 2301 RETURN all -- * * 192.168.11.0/24 224.0.0.0/24

12 0 0 RETURN all -- * * 192.168.11.0/24 255.255.255.255

13 0 0 MASQUERADE tcp -- * * 192.168.11.0/24 !192.168.11.0/24 masq ports: 1024-65535

14 23 4476 MASQUERADE udp -- * * 192.168.11.0/24 !192.168.11.0/24 masq ports: 1024-65535

15 1 84 MASQUERADE all -- * * 192.168.11.0/24 !192.168.11.0/24

16 29 2541 RETURN all -- * * 192.168.121.0/24 224.0.0.0/24

17 0 0 RETURN all -- * * 192.168.121.0/24 255.255.255.255

18 36 2160 MASQUERADE tcp -- * * 192.168.121.0/24 !192.168.121.0/24 masq ports: 1024-65535

19 65 7792 MASQUERADE udp -- * * 192.168.121.0/24 !192.168.121.0/24 masq ports: 1024-65535

20 0 0 MASQUERADE all -- * * 192.168.121.0/24 !192.168.121.0/24

21 20 2119 RETURN all -- * * 192.168.24.0/24 224.0.0.0/24

22 0 0 RETURN all -- * * 192.168.24.0/24 255.255.255.255

23 0 0 MASQUERADE tcp -- * * 192.168.24.0/24 !192.168.24.0/24 masq ports: 1024-65535

24 21 4076 MASQUERADE udp -- * * 192.168.24.0/24 !192.168.24.0/24 masq ports: 1024-65535

25 0 0 MASQUERADE all -- * * 192.168.24.0/24 !192.168.24.0/24

26 20 2119 RETURN all -- * * 192.168.23.0/24 224.0.0.0/24

27 0 0 RETURN all -- * * 192.168.23.0/24 255.255.255.255

28 1 60 MASQUERADE tcp -- * * 192.168.23.0/24 !192.168.23.0/24 masq ports: 1024-65535

29 20 3876 MASQUERADE udp -- * * 192.168.23.0/24 !192.168.23.0/24 masq ports: 1024-65535

30 1 84 MASQUERADE all -- * * 192.168.23.0/24 !192.168.23.0/24

31 25 2389 RETURN all -- * * 192.168.66.0/24 224.0.0.0/24

32 0 0 RETURN all -- * * 192.168.66.0/24 255.255.255.255

33 0 0 MASQUERADE tcp -- * * 192.168.66.0/24 !192.168.66.0/24 masq ports: 1024-65535

34 23 4476 MASQUERADE udp -- * * 192.168.66.0/24 !192.168.66.0/24 masq ports: 1024-65535

35 0 0 MASQUERADE all -- * * 192.168.66.0/24 !192.168.66.0/24

36 24 2298 RETURN all -- * * 192.168.12.0/24 224.0.0.0/24

37 0 0 RETURN all -- * * 192.168.12.0/24 255.255.255.255

38 0 0 MASQUERADE tcp -- * * 192.168.12.0/24 !192.168.12.0/24 masq ports: 1024-65535

39 23 4476 MASQUERADE udp -- * * 192.168.12.0/24 !192.168.12.0/24 masq ports: 1024-65535

40 0 0 MASQUERADE all -- * * 192.168.12.0/24 !192.168.12.0/24

#

# iptables -t nat -I LIBVIRT_PRT 13 -s 192.168.11.0/24 -d 192.168.23.0/24 -j RETURN

# iptables -t nat -I LIBVIRT_PRT 29 -s 192.168.23.0/24 -d 192.168.11.0/24 -j RETURN
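The positions 13 and 29 in the two insert commands are not arbitrary: each RETURN rule has to land before the first MASQUERADE rule for its source subnet, and the second position is 28 plus one because the first insert shifts everything below it. The sketch below reproduces that arithmetic from a saved listing. The function names are hypothetical, and it only builds the command strings, it does not run iptables.

```python
def first_masquerade_rule(listing, source):
    """Return the rule number of the first MASQUERADE rule for `source`.

    `listing` is the text of `iptables -t nat -vnL <chain> --line-numbers`.
    Columns: num pkts bytes target prot opt in out source destination ...
    """
    for line in listing.splitlines():
        fields = line.split()
        if (len(fields) >= 10 and fields[0].isdigit()
                and fields[3] == "MASQUERADE" and fields[8] == source):
            return int(fields[0])
    return None


def exemption_commands(listing, pairs, chain="LIBVIRT_PRT"):
    """Build `iptables -I` commands exempting each (src, dst) pair from NAT.

    Commands are meant to be run in order, so every earlier insert at or
    above a position shifts that position down by one.
    """
    commands = []
    earlier = []  # original positions of inserts already planned
    for src, dst in pairs:
        pos = first_masquerade_rule(listing, src)
        if pos is None:
            raise ValueError(f"no MASQUERADE rule found for {src}")
        shifted = pos + sum(1 for p in earlier if p <= pos)
        earlier.append(pos)
        commands.append(
            f"iptables -t nat -I {chain} {shifted} -s {src} -d {dst} -j RETURN"
        )
    return commands
```

Called with the listing above and the pairs `("192.168.11.0/24", "192.168.23.0/24")` and `("192.168.23.0/24", "192.168.11.0/24")`, this yields the same two commands I typed by hand.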

OK, starting to ping from CE1 to CE3:

vagrant@CE1:~$ ping 192.168.23.102

PING 192.168.23.102 (192.168.23.102) 56(84) bytes of data.

No good. Let's check what the next hop, PE1, is doing. It seems to be sending the traffic double-encapsulated to P1, as expected:

root@PE1:/home/vagrant# tcpdump -i ens8

...

20:29:16.648325 MPLS (label 17, exp 0, ttl 63) (label 80, exp 0, [S], ttl 63) IP 192.168.11.102 > 192.168.23.102: ICMP echo request, id 2298, seq 2627, length 64

20:29:17.672287 MPLS (label 17, exp 0, ttl 63) (label 80, exp 0, [S], ttl 63) IP 192.168.11.102 > 192.168.23.102: ICMP echo request, id 2298, seq 2628, length 64

...
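To make the tcpdump line easier to read: each MPLS label stack entry is a 32-bit word with a 20-bit label, 3 bits of EXP (traffic class), a 1-bit bottom-of-stack flag (the `[S]` in the capture) and an 8-bit TTL. The sketch below packs and unpacks the stack seen on PE1's ens8 (LDP label 17 on top, BGP VPN label 80 at the bottom). The function names are my own, not from any library.

```python
import struct


def encode_label_stack(entries):
    """Pack a list of (label, exp, ttl) tuples, top of stack first.

    The bottom-of-stack bit is set automatically on the last entry.
    """
    out = b""
    for index, (label, exp, ttl) in enumerate(entries):
        bottom = 1 if index == len(entries) - 1 else 0
        word = (label << 12) | (exp << 9) | (bottom << 8) | ttl
        out += struct.pack("!I", word)  # network byte order, 4 bytes
    return out


def decode_label_stack(data):
    """Unpack label stack entries until the bottom-of-stack bit is seen."""
    entries = []
    for offset in range(0, len(data) - 3, 4):
        (word,) = struct.unpack_from("!I", data, offset)
        entry = {
            "label": word >> 12,
            "exp": (word >> 9) & 0x7,
            "s": (word >> 8) & 0x1,
            "ttl": word & 0xFF,
        }
        entries.append(entry)
        if entry["s"]:
            break
    return entries


if __name__ == "__main__":
    stack = encode_label_stack([(17, 0, 63), (80, 0, 63)])
    print(stack.hex())
    print(decode_label_stack(stack))
```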

Let's check the next hop, P1. Capturing on PE2's ens8, I can see P1 is sending the traffic to PE2 doing PHP (penultimate hop popping), so it removes the top label (LDP) and leaves only the BGP label:

root@PE2:/home/vagrant# tcpdump -i ens8

...

20:29:16.648176 MPLS (label 80, exp 0, [S], ttl 63) IP 192.168.11.102 > 192.168.23.102: ICMP echo request, id 2298, seq 2627, length 64

20:29:17.671968 MPLS (label 80, exp 0, [S], ttl 63) IP 192.168.11.102 > 192.168.23.102: ICMP echo request, id 2298, seq 2628, length 64

...

But then PE2 is not sending anything to CE3. I can't see any of the pings on the link, only STP:

root@CE3:/home/vagrant# tcpdump -i ens6

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ens6, link-type EN10MB (Ethernet), capture size 262144 bytes

20:32:03.174796 STP 802.1d, Config, Flags [none], bridge-id 8000.52:54:00:e2:cb:54.8001, length 35

20:32:05.158761 STP 802.1d, Config, Flags [none], bridge-id 8000.52:54:00:e2:cb:54.8001, length 35

20:32:07.174742 STP 802.1d, Config, Flags [none], bridge-id 8000.52:54:00:e2:cb:54.8001, length 35
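The PHP step can be checked against P1's `show mpls table` with a tiny model of the label operation. This is a toy sketch, not how the kernel forwards: the `forward` function and the dictionary layout are made up for illustration, with the table contents copied from P1's output earlier in the post.

```python
# P1's label table: inbound label -> outbound label (from `show mpls table`).
P1_LFIB = {
    16: "implicit-null",  # towards PE1 (192.168.66.101)
    17: "implicit-null",  # towards PE2 (192.168.77.101)
}


def forward(stack, lfib):
    """Apply one hop of label switching to a stack (top label first).

    An outbound label of "implicit-null" means pop (PHP); anything else
    is treated as a swap of the top label.
    """
    top, rest = stack[0], stack[1:]
    outbound = lfib[top]
    if outbound == "implicit-null":
        return rest
    return [outbound] + rest


if __name__ == "__main__":
    # PE1 sends [17, 80]; after P1 only the VPN label 80 should remain.
    print(forward([17, 80], P1_LFIB))
```

The result, a stack holding only label 80, matches what the capture on PE2's ens8 shows.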

I have double-checked the configs. All routing and config looks sane in PE2:

vagrant@PE2:~$ ip route

default via 192.168.121.1 dev ens5 proto dhcp src 192.168.121.31 metric 1024

172.20.5.1 encap mpls 16 via 192.168.77.102 dev ens8 proto isis metric 20

172.20.5.5 via 192.168.77.102 dev ens8 proto isis metric 20

192.168.66.0/24 via 192.168.77.102 dev ens8 proto isis metric 20

192.168.77.0/24 dev ens8 proto kernel scope link src 192.168.77.101

192.168.121.0/24 dev ens5 proto kernel scope link src 192.168.121.31

192.168.121.1 dev ens5 proto dhcp scope link src 192.168.121.31 metric 1024

vagrant@PE2:~$

vagrant@PE2:~$ ip -4 a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 172.20.5.2/32 scope global lo

valid_lft forever preferred_lft forever

2: ens5: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.121.31/24 brd 192.168.121.255 scope global dynamic ens5

valid_lft 2524sec preferred_lft 2524sec

3: ens6: mtu 1500 qdisc pfifo_fast master vrf_cust1 state UP group default qlen 1000

inet 192.168.23.101/24 brd 192.168.23.255 scope global ens6

valid_lft forever preferred_lft forever

4: ens7: mtu 1500 qdisc pfifo_fast master vrf_cust2 state UP group default qlen 1000

inet 192.168.24.101/24 brd 192.168.24.255 scope global ens7

valid_lft forever preferred_lft forever

5: ens8: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.77.101/24 brd 192.168.77.255 scope global ens8

valid_lft forever preferred_lft forever

vagrant@PE2:~$

vagrant@PE2:~$ ip -M route

16 as to 16 via inet 192.168.77.102 dev ens8 proto ldp

17 via inet 192.168.77.102 dev ens8 proto ldp

18 via inet 192.168.77.102 dev ens8 proto ldp

vagrant@PE2:~$
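Since the packets reach PE2 with label 80 on top, one thing that may be worth cross-checking is whether the kernel MPLS table has an entry for that label. The sketch below is a hypothetical helper that extracts the inbound labels from saved `ip -M route` output. In the output pasted above only 16, 17 and 18 appear, so it would report 80 as absent; whether that is actually the cause of the drop is not confirmed here.

```python
def kernel_mpls_labels(ip_mpls_route_output):
    """Return the set of inbound labels listed by `ip -M route`.

    Each route line starts with the inbound label number.
    """
    labels = set()
    for line in ip_mpls_route_output.splitlines():
        fields = line.split()
        if fields and fields[0].isdigit():
            labels.add(int(fields[0]))
    return labels


if __name__ == "__main__":
    # Output copied from PE2 in this post.
    pe2_output = """\
16 as to 16 via inet 192.168.77.102 dev ens8 proto ldp
17 via inet 192.168.77.102 dev ens8 proto ldp
18 via inet 192.168.77.102 dev ens8 proto ldp
"""
    labels = kernel_mpls_labels(pe2_output)
    for wanted in (80, 81):
        state = "present" if wanted in labels else "missing"
        print(f"label {wanted}: {state}")
```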

vagrant@PE2:~$ ip route show table 10

blackhole default

192.168.11.0/24 encap mpls 16/80 via 192.168.77.102 dev ens8 proto bgp metric 20

broadcast 192.168.23.0 dev ens6 proto kernel scope link src 192.168.23.101

192.168.23.0/24 dev ens6 proto kernel scope link src 192.168.23.101

local 192.168.23.101 dev ens6 proto kernel scope host src 192.168.23.101

broadcast 192.168.23.255 dev ens6 proto kernel scope link src 192.168.23.101

vagrant@PE2:~$

vagrant@PE2:~$

vagrant@PE2:~$ ip vrf

Name Table

vrf_cust1 10

vrf_cust2 20

vagrant@PE2:~$

root@PE2:/home/vagrant# sysctl -a | grep mpls

net.mpls.conf.ens5.input = 0

net.mpls.conf.ens6.input = 0

net.mpls.conf.ens7.input = 0

net.mpls.conf.ens8.input = 1

net.mpls.conf.lo.input = 0

net.mpls.conf.vrf_cust1.input = 0

net.mpls.conf.vrf_cust2.input = 0

net.mpls.default_ttl = 255

net.mpls.ip_ttl_propagate = 1

net.mpls.platform_labels = 100000

root@PE2:/home/vagrant#

root@PE2:/home/vagrant# lsmod | grep mpls

mpls_iptunnel 16384 3

mpls_router 36864 1 mpls_iptunnel

ip_tunnel 24576 1 mpls_router

root@PE2:/home/vagrant#

So I have been a bit puzzled by this issue for the last couple of weeks. I thought iptables was fooling me again and dropping the traffic somehow, but as far as I can see, PE2 is not sending anything, and I don't really know how to troubleshoot FRR in this case. I have asked for help on the FRR list. Let's see how it goes. I think I am doing something wrong, because I am not doing anything new.