I watched some very interesting videos about AWS networking. They are high level, so they don't reveal the secret sauce you would like to know, but it is nice that this info is out in public.

— Evolution from buying chassis to building your own devices: consume -> create (NOC-less, auto-remediation, active telemetry, etc.) -> innovate (freedom to examine trade-offs, 1U devices). They clearly use “Clos” networks and their Linux-based software.

— Delighted: low complexity + high innovation

— Simplicity Scales

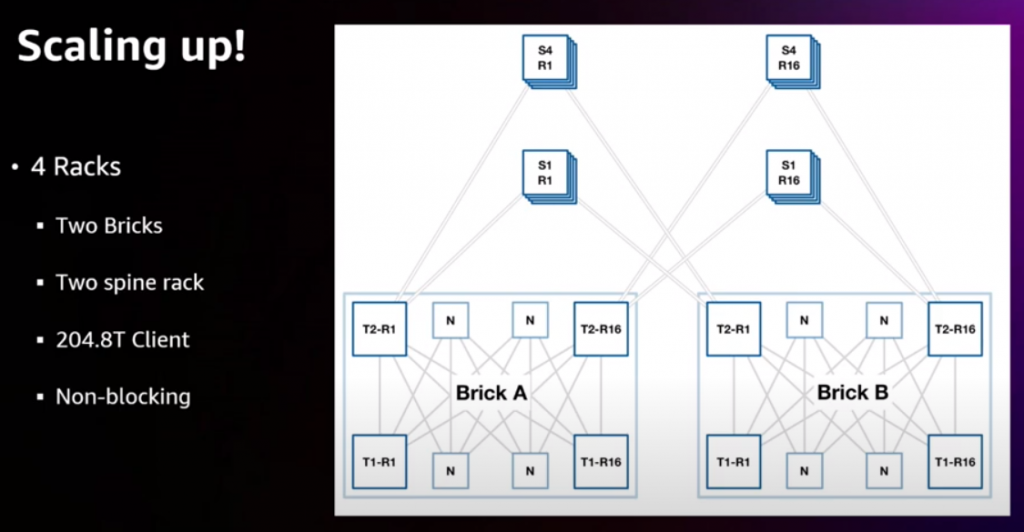

— The view of a router/brick as a set of 1U devices is interesting (rack 102.8T – 200x400G ports for customers, non-blocking). And it is very good that they have pictures of the concept of “bricks” and “spines”.

— Challenges with cabling (SN connector — no patching rack needed) and 400G ZR+ (heating!)

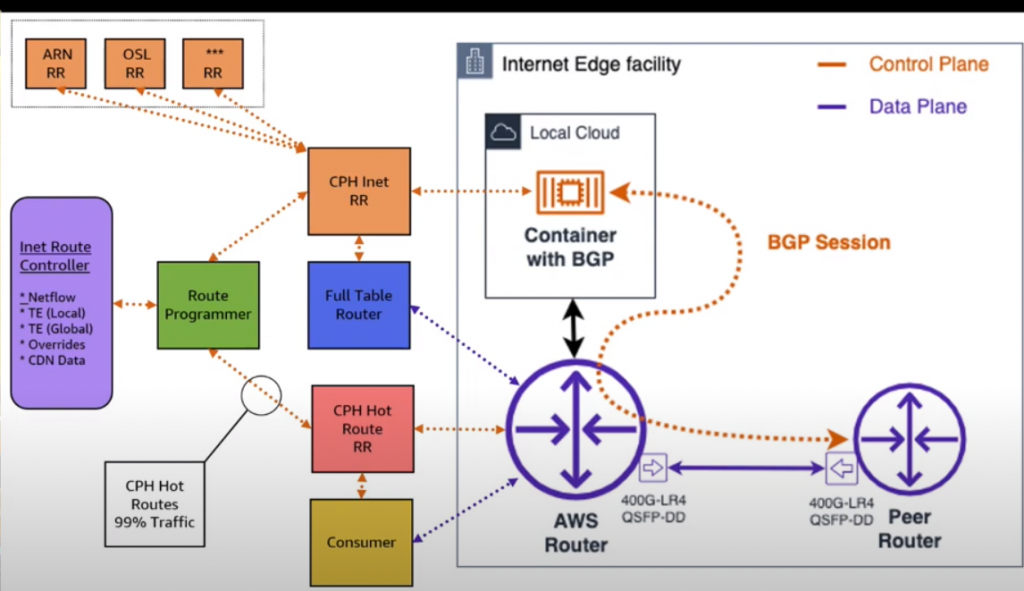

— BGP peering is actually with a container:

— James Hamilton paper – link + pdf
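
To make the "brick built from 1U devices" idea more concrete, here is a rough sizing sketch for a generic two-tier folded Clos (leaf/spine) fabric. It is not AWS's actual design: the function name `size_brick`, the 32-port radix, and the half-down/half-up port split are assumptions chosen only to show why identical small devices can add up to a large non-blocking "router".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BrickSize:
    leaves: int
    spines: int
    customer_ports: int
    capacity_tbps: float


def size_brick(radix: int, port_gbps: int, leaves: Optional[int] = None) -> BrickSize:
    """Size a non-blocking two-tier folded Clos made of identical devices.

    Assumption (hypothetical, for illustration): every leaf uses half of its
    ports towards customers and half towards spines, with one uplink per spine.
    """
    if radix <= 0 or radix % 2:
        raise ValueError("radix must be a positive even number")
    down_ports = radix // 2   # customer-facing ports per leaf
    spines = radix // 2       # one uplink from each leaf to each spine
    max_leaves = radix        # each spine port connects to one leaf
    if leaves is None:
        leaves = max_leaves
    if not 1 <= leaves <= max_leaves:
        raise ValueError(f"leaves must be between 1 and {max_leaves}")
    ports = leaves * down_ports
    return BrickSize(leaves, spines, ports, ports * port_gbps / 1000)


if __name__ == "__main__":
    # Hypothetical 32 x 400G 1U device
    print(size_brick(radix=32, port_gbps=400))
```

With these assumed numbers, 48 small devices (32 leaves plus 16 spines) behave like one 512-port box. The figures quoted in the talk depend on the real device radix and on how ports are reserved, which the videos do not detail.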

- AWS re:Invent 2022 – Dive deep on AWS networking infrastructure (NET402) – link

— summary: This is “similar” to the DKNOG talk but longer, with some other details like:

— “We don't like chassis”. 1+ million devices

— SRD (Scalable Reliable Datagram) at NIC level, so one TCP flow is actually load-balanced over several paths

— Hybrid SDN approach: You have controllers that give you a big-picture view (I guess they provide the visibility to say “just send 70% of the traffic to this device”, but I am not sure how) plus each device's own capability to deal with changes.

— Telemetry, continuous monitoring, triangulation: Be able to detect which port/device is causing the problem.
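
The triangulation idea can be illustrated with a toy example: send probes over many known paths, then rank each hop by the share of failed probes that crossed it. This is only a sketch of the general technique, not how AWS implements it; the function `triangulate` and the hop names are made up for the example.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple


def triangulate(probes: Iterable[Tuple[Sequence[str], bool]]) -> List[Tuple[str, float]]:
    """Rank hops by the fraction of probes through them that failed.

    Each probe is (path, ok), where path lists the hops it traversed.
    Only hops with at least one failed probe are returned, worst first.
    """
    total: Counter = Counter()
    failed: Counter = Counter()
    for path, ok in probes:
        for hop in set(path):
            total[hop] += 1
            if not ok:
                failed[hop] += 1
    suspects = [hop for hop in total if failed[hop]]
    # Sort by failure ratio, then by absolute failures, then by name for stability
    suspects.sort(key=lambda h: (-failed[h] / total[h], -failed[h], h))
    return [(hop, failed[hop] / total[hop]) for hop in suspects]


if __name__ == "__main__":
    probes = [
        (["leaf1", "spine1", "leaf3"], True),
        (["leaf1", "spine2", "leaf3"], False),
        (["leaf2", "spine2", "leaf3"], False),
        (["leaf2", "spine1", "leaf3"], True),
        (["leaf1", "spine2", "leaf4"], False),
        (["leaf2", "spine1", "leaf4"], True),
    ]
    for hop, ratio in triangulate(probes):
        print(f"{hop}: {ratio:.0%} of probes failed")
```

In this made-up data set every failing probe crosses `spine2` and every probe through `spine1` succeeds, so `spine2` ends up at the top of the list even though the leaves also appear in failed paths.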

- AWS re:Invent 2022 – Leaping ahead: The power of cloud network innovation (NET211-L) – link:

— AWS Global Infrastructure: Backbone capacity

— Custom SW/HW

— Everything fails all the time

— GPS locations in fibers! + inject light into the fiber to double-check the fault -> intelligent optical routing/failover -> better than BGP...

— Termite-resistant fiber sheathing for Australia 🙂

— Nitro card = NIC (offload card)

— SRD: does not need in-order packet delivery, as TCP requires. 25 Gbps flows are allowed now.
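
To illustrate why dropping the in-order requirement lets a single flow use several paths, here is a toy model: packets are sprayed round-robin over multiple paths, arrive interleaved, and a sequence-number buffer restores the order at the end. This is not the SRD wire protocol or AWS's algorithm; `spray`, `deliver_out_of_order` and `reorder` are hypothetical names used only for this sketch.

```python
import random
from collections import deque
from typing import Deque, Dict, List, Tuple

Packet = Tuple[int, str]  # (sequence number, payload)


def spray(payloads: List[str], n_paths: int) -> List[List[Packet]]:
    """Spread one flow's packets round-robin over n_paths paths."""
    paths: List[List[Packet]] = [[] for _ in range(n_paths)]
    for seq, payload in enumerate(payloads):
        paths[seq % n_paths].append((seq, payload))
    return paths


def deliver_out_of_order(paths: List[List[Packet]], rng: random.Random) -> List[Packet]:
    """Simulate paths with different latencies: order is kept per path only."""
    queues: List[Deque[Packet]] = [deque(p) for p in paths if p]
    arrived: List[Packet] = []
    while queues:
        i = rng.randrange(len(queues))
        arrived.append(queues[i].popleft())
        if not queues[i]:
            queues.pop(i)
    return arrived


def reorder(arrived: List[Packet]) -> List[str]:
    """Release payloads in sequence order, buffering early arrivals."""
    pending: Dict[int, str] = {}
    next_seq = 0
    in_order: List[str] = []
    for seq, payload in arrived:
        pending[seq] = payload
        while next_seq in pending:
            in_order.append(pending.pop(next_seq))
            next_seq += 1
    return in_order


if __name__ == "__main__":
    payloads = [f"chunk-{i}" for i in range(12)]
    arrived = deliver_out_of_order(spray(payloads, n_paths=4), random.Random(7))
    print("arrival order:", [seq for seq, _ in arrived])
    print("restored     :", reorder(arrived) == payloads)
```

A classic hash-based ECMP setup would pin the whole flow to one path to avoid reordering. Once the endpoints tolerate out-of-order arrival, a single flow is no longer limited to the capacity of one path.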