Read 1. Meta to build its own AI chips. Currently using 16k A100 GPU (Google using 26k H100 GPU). And it seems Graphcore had some issues in 2020.

Read 2. Didnt know Colovore, interesting to see how critical is actually power/cooling with all the hype in AI and power constrains in key regions (Ashburn VA…) And with proper water cooling you can have a 200kw rack! And seems they have the same power as a 6x bigger facility. Cost of cooling via water is cheaper than air-cooled.

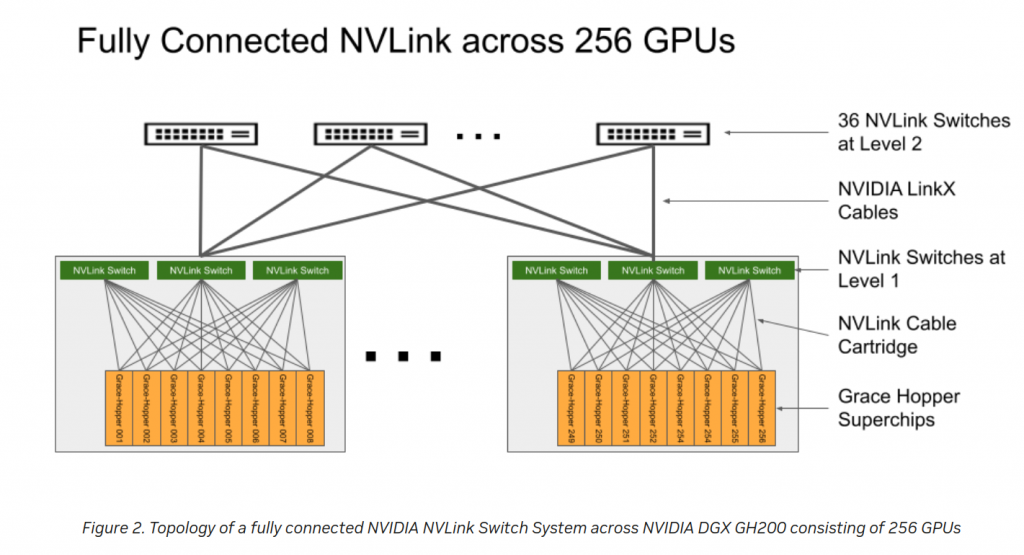

Read 3. Google one of biggest NVIDIA GPU customer although they built TPUv4. MS uses 10k A100 GPU for training GPT4. 25k for GPT5 (mix of A100 and H100?) For customer, MS offers AI supercomputer based on H100, 400G infiniband quantum2 switches and ConnectX-7 NICs: 4k GPU. Google has A3 GPU instanced treated like supercomputers and uses “Apollo” optical circuit switching (OCS). “The OCS layer replaces the spine layer in a leaf/spine Clos topology” -> interesting to see what that means and looks like. As well, it uses NVSwitch for interconnect the GPUs memories to act like one. As well, they have their own (smart) NICS (DPU data processing units or infrastructure processing units IPU?) using P4. Google has its own “inter-server GPU communication stack” as well as NCCL optimizations (2016! post).

Read4: Via the P4 newletter. Since Intel bought Barefoot, I kind of assumed the product was nearly dead but visiting the page and checking this slides, it seems “alive”. Sonic+P4 are main players in Google SDN.

“Google has pioneered Software-Defined Networking (SDN) in data centers for over a decade. With the open sourcing of PINS (P4 Integrated Network Stack) two years ago, Google has ushered in a new model to remotely configure network switches. PINS brings in a P4Runtime application container to the SONiC architecture and supports extensions that make it easier for operators to realize the benefits of SDN. We look forward to enhancing the PINS capabilities and continue to support the P4 community in the future”

Read5: Slingshot is another switching technology coming from Cray supercomputers and trying to compete with Infiniband. A 2019 link that looks interesting too. Paper that I dont thik I will be able to read neither understand.

Read6: ISC High Performance 2023. I need to try to attend one of these events in the future. There are two interesting talks although I doubt they will provide any online video or slides.

– Talk1: Intro to Networking Technologies for HPC: “InfiniBand (IB), High-speed Ethernet (HSE), RoCE, Omni-Path, EFA, Tofu, and Slingshot technologies are generating a lot of excitement towards building next generation High-End Computing (HEC) systems including clusters, datacenters, file systems, storage, cloud computing and Big Data (Hadoop, Spark, HBase and Memcached) environments. This tutorial will provide an overview of these emerging technologies, their offered architectural features, their current market standing, and their suitability for designing HEC systems. It will start with a brief overview of IB, HSE, RoCE, Omni-Path, EFA, Tofu, and Slingshot. In-depth overview of the architectural features of IB, HSE (including iWARP and RoCE), and Omni-Path, their similarities and differences, and the associated protocols will be presented. An overview of the emerging NVLink, NVLink2, NVSwitch, Slingshot, Tofu architectures will also be given. Next, an overview of the OpenFabrics stack which encapsulates IB, HSE, and RoCE (v1/v2) in a unified manner will be presented. An overview of libfabrics stack will also be provided. Hardware/software solutions and the market trends behind these networking technologies will be highlighted. Sample performance numbers of these technologies and protocols for different environments will be presented. Finally, hands-on exercises will be carried out for the attendees to gain first-hand experience of running experiments with high-performance networks”

– Talk2: State-of-the-Art High Performance MPI Libraries and Slingshot Networking: “Many top supercomputers utilize InfiniBand networking across nodes to scale out performance. Underlying interconnect technology is a critical component in achieving high performance, low latency and high throughput, at scale on next-generation exascale systems. The deployment of Slingshot networking for new exascale systems such as Frontier at OLCF and the upcoming El-Capitan at LLNL pose several challenges. State-of-the-art MPI libraries for GPU-aware and CPU-based communication should adapt to be optimized for Slingshot networking, particularly with support for the underlying HPE Cray fabric and adapter to have functionality over the Slingshot-11 interconnect. This poses a need for a thorough evaluation and understanding of slingshot networking with regards to MPI-level performance in order to provide efficient performance and scalability on exascale systems. In this work, we delve into a comprehensive evaluation on Slingshot-10 and Slingshot-11 networking with state-of-the-art MPI libraries and delve into the challenges this newer ecosystem poses.”

Read7: Slides and Video. I was aware of Dtrace (although never used it) so not sure how to compare with KUtrace. I guess I will ask Chat-GPT 🙂

Read8: Python as programming of choice for AI, ML, etc.

Read9: M$ “buying” energy from fusion reactors.